
Program testing goals

- To demonstrate to the developer and the customer that the software meets its requirements.

- For custom software, this means that there should be at least one test for every requirement in the requirements document. For generic software products, it means that there should be tests for all of the system features, plus combinations of these features, that will be incorporated in the product release.

- To discover situations in which the behavior of the software is incorrect, undesirable or does not conform to its specification.

- Defect testing is concerned with rooting out undesirable system behavior such as system crashes, unwanted interactions with other systems, incorrect computations and data corruption.
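For custom software, the first goal implies a simple check: every requirement should be traced to at least one test. The sketch below assumes requirements and tests are tracked in a plain map. The requirement IDs (`REQ-1` and so on), the test names and the class `RequirementsCoverage` are all hypothetical, for illustration only.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class RequirementsCoverage {
    // Returns the requirement IDs that have no test case mapped to them.
    static List<String> untestedRequirements(List<String> requirements,
                                             Map<String, List<String>> testsByRequirement) {
        return requirements.stream()
                .filter(req -> testsByRequirement.getOrDefault(req, List.of()).isEmpty())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical requirement IDs and test names.
        List<String> requirements = List.of("REQ-1", "REQ-2", "REQ-3");
        Map<String, List<String>> tests = new LinkedHashMap<>();
        tests.put("REQ-1", List.of("testLoginWithValidPassword"));
        tests.put("REQ-2", List.of("testPrintSchedule", "testPrintEmptySchedule"));
        // REQ-3 has no test yet, so it should be reported.

        List<String> missing = untestedRequirements(requirements, tests);
        System.out.println(missing.isEmpty()
                ? "Every requirement has at least one test"
                : "Requirements without tests: " + missing);
    }
}
```

A check like this shows only that each requirement has *a* test. It says nothing about whether that test is a good one.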

Testing

- There are two aspects to testing:

- Planning this activity

- Carrying the activity out

- Note that this will draw on, and enhance, your experience in other modules.

- The testing process is primarily concerned with finding defects within a piece of software.

- Testing can only show the presence of errors, not their absence.

- Useful link: 📹 A beginner's guide to testing

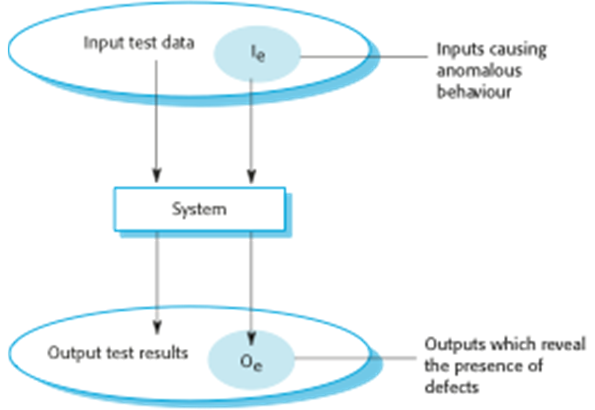

Program testing

- Testing is intended to show that a program does what it is intended to do and to discover program defects before it is put into use.

- When you test software, you execute a program using artificial data.

- You check the results of the test run for errors, anomalies or information about the program’s non-functional attributes.

- Testing can reveal the presence of errors, NOT their absence.

- Testing is part of a more general verification and validation process, which also includes static validation techniques.


Verification and Validation

- Validation: Are we building the right product?

- Verification: Are we building the product right?

Verification

- The aim of verification is to check that the software meets its stated functional and non-functional requirements.

Validation

- The aim of validation is to ensure that the product meets the customer's expectations.

Validation and defect testing

- The first goal leads to validation testing

- You expect the system to perform correctly using a given set of test cases that reflect the system’s expected use.

- The second goal leads to defect testing

- The test cases are designed to expose defects. The test cases in defect testing can be deliberately obscure and need not reflect how the system is normally used.

Testing process goals

- Validation testing

- To demonstrate to the developer and the system customer that the software meets its requirements

- A successful test shows that the system operates as intended.

- Defect testing

- To discover faults or defects in the software where its behaviour is incorrect or not in conformance with its specification

- A successful test is a test that makes the system perform incorrectly and so exposes a defect in the system.

- How do you know that you have a defect in the software that you are developing? You find it by testing!

- 📷 An input-output model of program testing


Inefficiencies

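```java
// Two separate if statements: the second condition is always evaluated,
// even though it is simply the negation of the first.
if (a <= 0) {
    // do something
}
if (a > 0) {
    // do something else
}

// A single test with an else branch avoids re-evaluating the condition.
if (a <= 0) {
    // do something
} else {
    // do something else
}
```

Testing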

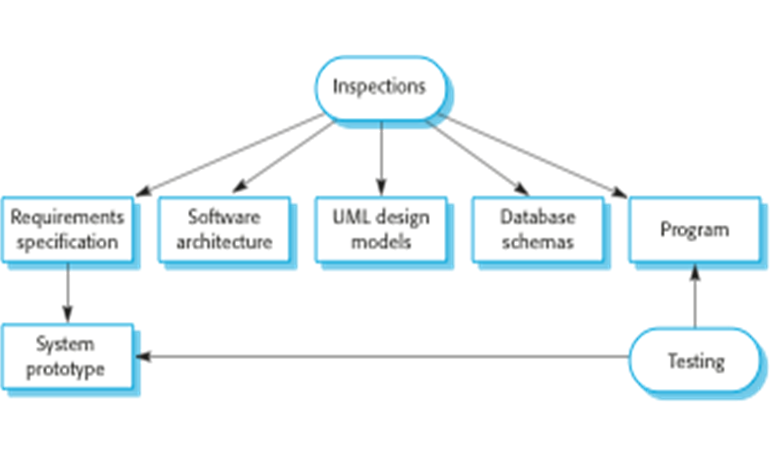

- Inspections may not find problems with:

- Interactions between systems

- Timing problems

- System performance

- 📷 Inspections and testing

- 📷 A model of the software testing process

Inspections and Reviews

- This involves “reading” any readable representation of the software such as the source code and specification.

- 📷 Inspections

Source Code

- Reading through the source code can reveal errors of various kinds. Compare this with the pair programming approach used in some versions of Agile development.

Source Code inspections

- This activity may help to find:

- Semantic errors

- Inefficiencies

- Algorithm use

- Poor programming style (may affect maintainability of the system and lead to difficulty in performing updates)

Semantic/Logic Error

```java
double x;
double b;
double c;
double a;
x = -b + Math.sqrt(((b*b) - 4*a*c)/2*a);
```

- Where is the possible logical error here?
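One way to investigate, assuming the expression is meant to compute one root of ax² + bx + c = 0 with the standard formula (−b + √(b² − 4ac)) / 2a, is a defect test that uses a quadratic whose roots are already known. In the sketch below, the class and method names are hypothetical. `asWritten` keeps the slide's expression, and `corrected` follows the standard formula. For 2x² − 6x + 4 = 0, whose roots are 1 and 2, the two results differ, which exposes the defect.

```java
public class QuadraticRootCheck {
    // The expression as it appears on the slide (with a plain minus sign).
    static double asWritten(double a, double b, double c) {
        return -b + Math.sqrt(((b * b) - 4 * a * c) / 2 * a);
    }

    // Standard quadratic formula for one root: (-b + sqrt(b^2 - 4ac)) / (2a).
    static double corrected(double a, double b, double c) {
        return (-b + Math.sqrt(b * b - 4 * a * c)) / (2 * a);
    }

    public static void main(String[] args) {
        // 2x^2 - 6x + 4 = 0 has roots 1 and 2; the "+" root is 2.
        double a = 2, b = -6, c = 4;
        System.out.println("as written: " + asWritten(a, b, c)); // prints "as written: 8.0"
        System.out.println("corrected:  " + corrected(a, b, c)); // prints "corrected:  2.0"
    }
}
```

Under that assumption, the slide's version places the division inside `Math.sqrt`, applies it only to the square-root term rather than to `-b + ...`, and `/2*a` multiplies by `a` instead of dividing by `2a`. The code compiles and runs, so only a test with known answers (or an inspection) reveals the problem.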


Stages of testing

- Development testing, where the system is tested during development to discover bugs and defects.

- Release testing, where a separate testing team tests a complete version of the system before it is released to users.

- User testing, where users or potential users of a system test the system in their own environment.

Testing

- Three stages of testing can be identified:

- Developmental testing

- Release testing

- User testing

Developmental Testing

- This sub-phase consists of:

- Unit testing

- Component testing

- System testing

Development testing

- Development testing includes all testing activities that are carried out by the team developing the system.

- Unit testing, where individual program units or object classes are tested. Unit testing should focus on testing the functionality of objects or methods.

- Component testing, where several individual units are integrated to create composite components. Component testing should focus on testing component interfaces.

- System testing, where some or all of the components in a system are integrated and the system is tested as a whole. System testing should focus on testing component interactions.

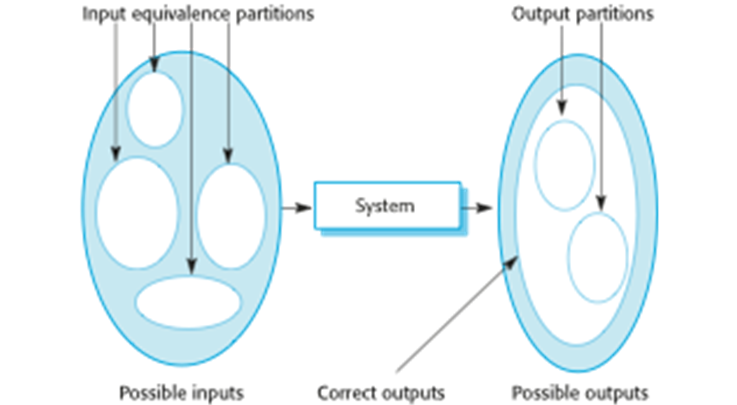

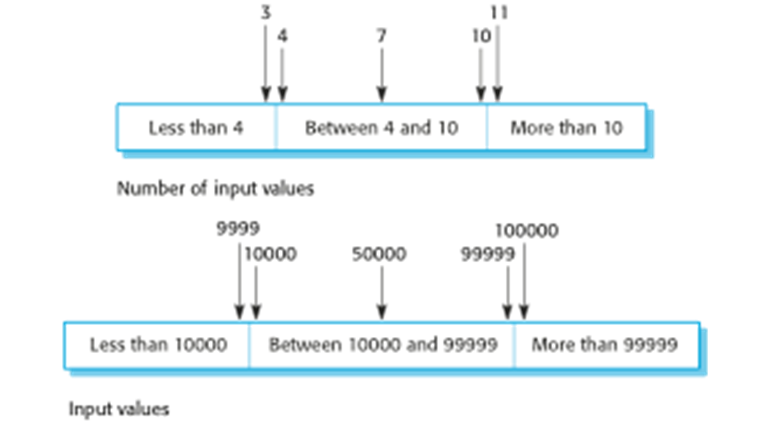

Choosing software test cases

- A short introduction to this approach to choosing software test cases.

- 📹 Equivalence partitioning and boundary value analysis


The weather station-case study

- This case study is based on the software for a wilderness weather station that collects weather information in remote areas that do not have local infrastructure (power, communications, roads, etc.).

- These weather stations are part of a national weather information system that is intended to capture and analyse detailed weather information to help understand both local and national climate change and to support the national weather forecasting service.

- The important characteristic of the weather station is that it has to be entirely self-contained as power and communications are not available.

- It may also be deployed far from a road so access for repairs is difficult and expensive.

- This means that the system has to have its own power generation capability (using either solar panels or wind power), communicate via satellite and be able to reconfigure itself to cope with instrument problems and software upgrades.

- The software is embedded, in that it is part of a wider hardware/software system, but its timing requirements are such that very fast responses are not required.

- Therefore, it need not be based on one of the architectural styles for real-time systems discussed in the Software Engineering textbook.

- 💡 The weather station object interface

Weather station testing

- Need to define test cases for reportWeather, calibrate, test, startup and shutdown.

- Using a state model, identify sequences of state transitions to be tested and the event sequences that cause these transitions.

- For example:

- Shutdown -> Running -> Shutdown

- Configuring -> Running -> Testing -> Transmitting -> Running

- Running -> Collecting -> Running -> Summarizing -> Transmitting -> Running
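The example sequences can be written down as data so that each one is checked against a state model. In the sketch below, the `State` enum and the transition table are assumptions. The table contains only the transitions that appear in the three example sequences, not the complete weather station state model. In a real test, each transition would be triggered by sending the corresponding event to the station object and then asserting the resulting state.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class WeatherStationTransitions {
    enum State { SHUTDOWN, RUNNING, CONFIGURING, TESTING, TRANSMITTING, COLLECTING, SUMMARIZING }

    // Assumed transition table, built only from the example sequences above.
    static final Map<State, Set<State>> ALLOWED = new EnumMap<>(State.class);
    static {
        for (State s : State.values()) ALLOWED.put(s, EnumSet.noneOf(State.class));
        ALLOWED.get(State.SHUTDOWN).add(State.RUNNING);
        ALLOWED.get(State.RUNNING).addAll(EnumSet.of(
                State.SHUTDOWN, State.TESTING, State.COLLECTING, State.SUMMARIZING));
        ALLOWED.get(State.CONFIGURING).add(State.RUNNING);
        ALLOWED.get(State.TESTING).add(State.TRANSMITTING);
        ALLOWED.get(State.TRANSMITTING).add(State.RUNNING);
        ALLOWED.get(State.COLLECTING).add(State.RUNNING);
        ALLOWED.get(State.SUMMARIZING).add(State.TRANSMITTING);
    }

    // A sequence is valid if every consecutive pair of states is an allowed transition.
    static boolean isValidSequence(List<State> seq) {
        for (int i = 0; i + 1 < seq.size(); i++) {
            if (!ALLOWED.get(seq.get(i)).contains(seq.get(i + 1))) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        List<List<State>> sequences = List.of(
                List.of(State.SHUTDOWN, State.RUNNING, State.SHUTDOWN),
                List.of(State.CONFIGURING, State.RUNNING, State.TESTING,
                        State.TRANSMITTING, State.RUNNING),
                List.of(State.RUNNING, State.COLLECTING, State.RUNNING,
                        State.SUMMARIZING, State.TRANSMITTING, State.RUNNING));
        for (List<State> seq : sequences) {
            System.out.println(seq + " valid=" + isValidSequence(seq));
        }
        // A deliberately invalid sequence: this table has no SHUTDOWN -> TRANSMITTING transition.
        System.out.println("SHUTDOWN -> TRANSMITTING valid="
                + isValidSequence(List.of(State.SHUTDOWN, State.TRANSMITTING)));
    }
}
```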



The weather station object interface

WeatherStation

- Attribute: identifier

- Operations: reportWeather(), reportStatus(), powerSave(instruments), remoteControl(commands), reconfigure(commands), restart(instruments), shutdown(instruments)

Automated testing

- Whenever possible, unit testing should be automated so that tests are run and checked without manual intervention.

- In automated unit testing, you make use of a test automation framework (such as JUnit) to write and run your program tests.

- Unit testing frameworks provide generic test classes that you extend to create specific test cases. They can then run all of the tests that you have implemented and report, often through some GUI, on the success or otherwise of the tests.

Automated test components

- A setup part, where you initialize the system with the test case, namely the inputs and expected outputs.

- A call part, where you call the object or method to be tested.

- An assertion part, where you compare the result of the call with the expected result. If the assertion evaluates to true, the test has been successful; if false, then it has failed.
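The three parts of an automated test can be sketched without a test framework. The method `maxReading` and the test itself are hypothetical. With JUnit 5, the test would normally be a method annotated `@Test` that ends with `assertEquals(expected, actual, delta)` instead of returning a boolean. This version uses only the standard library so that it runs on its own.

```java
public class MaxReadingTest {
    // Hypothetical method under test: the highest value in a set of readings.
    static double maxReading(double[] readings) {
        if (readings.length == 0) throw new IllegalArgumentException("no readings");
        double max = readings[0];
        for (double r : readings) max = Math.max(max, r);
        return max;
    }

    // One automated test, structured as setup, call and assertion.
    static boolean testMaxReading() {
        // Setup: the test inputs and the expected output.
        double[] inputs = {12.5, -3.0, 17.25, 9.0};
        double expected = 17.25;

        // Call: invoke the method being tested.
        double actual = maxReading(inputs);

        // Assertion: compare the actual result with the expected result.
        return actual == expected;
    }

    public static void main(String[] args) {
        System.out.println(testMaxReading() ? "testMaxReading passed" : "testMaxReading FAILED");
    }
}
```

The exact `==` comparison is safe here only because the expected value is one of the inputs. For computed floating-point results, a tolerance (the `delta` in JUnit's `assertEquals`) is the usual choice.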

Unit Testing

- This is the process of testing program components, such as methods or object classes. You should design your tests to provide coverage of all the features of the object.

- You should:

- Test all operations associated with the object

- Set and check the value of all attributes associated with the object

- Put the object into all possible states.


Equivalence partitioning

Equivalence partitions

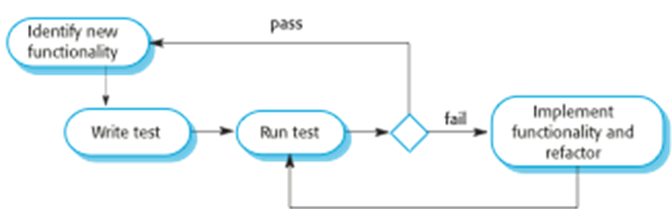
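Here is a minimal sketch of equivalence partitioning combined with boundary value analysis. It assumes a hypothetical requirement that an input must be an integer between 10000 and 99999 inclusive. That requirement gives three partitions: below the range, within it, and above it. The test picks one representative from the middle partition, plus values at and just either side of each boundary. The class and method names are illustrative only.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class EquivalencePartitionDemo {
    // Hypothetical requirement: valid inputs are integers from 10000 to 99999 inclusive.
    static final int MIN = 10000;
    static final int MAX = 99999;

    static boolean isValid(int value) {
        return value >= MIN && value <= MAX;
    }

    // Test inputs mapped to the expected result.
    static Map<Integer, Boolean> testCases() {
        Map<Integer, Boolean> cases = new LinkedHashMap<>();
        cases.put(MIN - 1, false); // just below the lower boundary (invalid partition)
        cases.put(MIN, true);      // on the lower boundary
        cases.put(50000, true);    // representative of the valid partition
        cases.put(MAX, true);      // on the upper boundary
        cases.put(MAX + 1, false); // just above the upper boundary (invalid partition)
        return cases;
    }

    // Runs every case, prints a verdict and returns the number of failures.
    static int runTests() {
        int failures = 0;
        for (Map.Entry<Integer, Boolean> c : testCases().entrySet()) {
            boolean actual = isValid(c.getKey());
            boolean passed = actual == c.getValue();
            if (!passed) failures++;
            System.out.println(c.getKey() + " -> " + actual + (passed ? " PASS" : " FAIL"));
        }
        return failures;
    }

    public static void main(String[] args) {
        int failures = runTests();
        System.out.println(failures == 0 ? "All partition tests passed" : failures + " failure(s)");
    }
}
```

Off-by-one mistakes (for example, writing `>` instead of `>=`) tend to appear exactly at the boundaries, which is why values on and next to each boundary are included.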

Test-driven development

- 📹 Test-driven development

Benefits of Test Driven Development

- Code Coverage

- Regression Testing

- Simplified Debugging

- System Documentation
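The test-first cycle can be sketched in a single file. The conversion method `celsiusToFahrenheit` and its test are hypothetical. In practice, the test is written first and run against a missing or empty implementation so that it fails (or does not compile). The simplest code that makes it pass is then written, and the test stays in the suite. That retained test is what provides the regression testing and documentation benefits listed above.

```java
public class TemperatureConversionTdd {
    // Step 1 (written first): the test states the required behaviour.
    static boolean testCelsiusToFahrenheit() {
        return celsiusToFahrenheit(0) == 32.0
            && celsiusToFahrenheit(100) == 212.0
            && celsiusToFahrenheit(-40) == -40.0;
    }

    // Step 2 (written second): the simplest implementation that makes the test pass.
    static double celsiusToFahrenheit(double celsius) {
        return celsius * 9.0 / 5.0 + 32.0;
    }

    public static void main(String[] args) {
        // Step 3: re-run the test after every subsequent change to the code.
        System.out.println(testCelsiusToFahrenheit() ? "PASS" : "FAIL");
    }
}
```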


Regression Testing

- This involves re-running test sets that have previously executed successfully, after changes have been made to a system.

- The regression test checks that these changes have not introduced new bugs into a system and that the new code interacts as expected with the existing code.
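A regression suite is essentially a stored collection of tests that all passed before a change and are re-run, unchanged, after it. The sketch below keeps named tests in a map and runs them all. The method under test, `clampPercentage`, and the test names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

public class RegressionSuite {
    // Hypothetical code under test; imagine this method has just been modified.
    static int clampPercentage(int value) {
        return Math.max(0, Math.min(100, value));
    }

    // The regression suite: tests that passed before the change, kept and re-run.
    static Map<String, BooleanSupplier> suite() {
        Map<String, BooleanSupplier> tests = new LinkedHashMap<>();
        tests.put("value inside range is unchanged", () -> clampPercentage(42) == 42);
        tests.put("negative value clamps to 0", () -> clampPercentage(-5) == 0);
        tests.put("large value clamps to 100", () -> clampPercentage(250) == 100);
        tests.put("boundaries are kept", () -> clampPercentage(0) == 0 && clampPercentage(100) == 100);
        return tests;
    }

    // Runs every test in the suite and returns the number of failures.
    static int runAll() {
        int failures = 0;
        for (Map.Entry<String, BooleanSupplier> t : suite().entrySet()) {
            boolean passed = t.getValue().getAsBoolean();
            if (!passed) failures++;
            System.out.println((passed ? "PASS  " : "FAIL  ") + t.getKey());
        }
        return failures;
    }

    public static void main(String[] args) {
        int failures = runAll();
        System.out.println(failures == 0 ? "No regressions detected" : failures + " regression(s)");
    }
}
```

Because the suite is automated, it is cheap to run after every change. A failure points at the change that was just made.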

Release Testing

- This type of testing occurs when you have something worth releasing to a customer. It consists of:

- Requirements-based testing

- Scenario testing

- Performance testing

A usage scenario for the MHC-PMS

- Kate is a nurse who specializes in mental health care. One of her responsibilities is to visit patients at home to check that their treatment is effective and that they are not suffering from medication side effects. On a day for home visits, Kate logs into the MHC-PMS and uses it to print her schedule of home visits for that day, along with summary information about the patients to be visited. She requests that the records for these patients be downloaded to her laptop. She is prompted for her key phrase to encrypt the records on the laptop. One of the patients that she visits is Jim, who is being treated with medication for depression. Jim feels that the medication is helping him but believes that it has the side effect of keeping him awake at night. Kate looks up Jim's record and is prompted for her key phrase to decrypt the record. She checks the drug prescribed and queries its side effects. Sleeplessness is a known side effect, so she notes the problem in Jim's record and suggests that he visits the clinic to have his medication changed. He agrees, so Kate enters a prompt to call him when she gets back to the clinic to make an appointment with a physician. She ends the consultation and the system re-encrypts Jim's record. After finishing her consultations, Kate returns to the clinic and uploads the records of patients visited to the database. The system generates a call list for Kate of those patients whom she has to contact for follow-up information and to make clinic appointments.

User Testing

- In practice, there are three types of user testing:

- Alpha testing

- Beta testing

- Acceptance testing


Alpha testing vs. beta testing

| Aspect | Alpha test | Beta test |
|---|---|---|
| What they do | Improve the quality of the product and ensure beta readiness. | Improve the quality of the product, integrate customer input on the complete product, and ensure release readiness. |
| When they happen | Towards the end of a development process, when the product is in a near fully-usable state. | Just prior to launch, sometimes ending within weeks or even days of final release. |
| How long they last | Usually very long, with many iterations. It's not uncommon for alpha to last 3-5x the length of beta. | Usually only a few weeks (sometimes up to a couple of months), with few major iterations. |
| Who cares about it | Almost exclusively quality/engineering (bugs, bugs, bugs). | Usually involves product marketing, support, docs, quality and engineering (basically the entire product team). |
| Who participates (tests) | Normally performed by test engineers, employees, and sometimes "friends and family". Focuses on testing that would emulate ~80% of the customers. | Tested in the "real world" with "real customers", and the feedback can cover every element of the product. |
| What testers should expect | Plenty of bugs, crashes, missing docs and features. | Some bugs, fewer crashes, most docs, feature complete. |
| How they're addressed | Most known critical issues are fixed; some features may change or be added as a result of early feedback. | Much of the feedback collected is considered for and/or implemented in future versions of the product. Only important/critical changes are made. |
| What they achieve | About methodology, efficiency and regimen. A good alpha test sets well-defined benchmarks and measures a product against those benchmarks. | About chaos, reality and imagination. Beta tests explore the limits of a product by allowing customers to explore every element of the product in their native environments. |
| When it's over | You have a decent idea of how a product performs and whether it meets the design criteria (and if it's "beta-ready"). | You have a good idea of what your customer thinks about the product and what they are likely to experience when they purchase it. |
| What happens next | Beta test! | Release party |

- Data source: https://www.centercode.com/blog/2011/01/alpha-vs-beta-testing/


The acceptance testing process

Black Box Testing

- Black-box testing is a method of software testing that examines the functionality of an application without peering into its internal structures or workings. This method of test can be applied to virtually every level of software testing: unit, integration, system and acceptance.

White Box Testing

- White-box testing is a method of testing software that tests internal structures or workings of an application, as opposed to its functionality. In white-box testing an internal perspective of the system, as well as programming skills, are used to design test cases. The tester chooses inputs to exercise paths through the code and determine the appropriate outputs. This is analogous to testing nodes in a circuit, e.g. in-circuit testing.
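The difference between the two approaches can be shown on one small unit. The sketch below assumes a hypothetical specification: orders of 50.00 or more ship free, and smaller orders pay a flat 4.99. The black-box tests are derived only from that specification. The white-box test comes from reading the code. The tester notices a branch for negative totals that the specification never mentions, and chooses an input that executes it. The class and method names are illustrative.

```java
public class BlackBoxWhiteBox {
    // Hypothetical unit under test.
    static double shippingCost(double orderTotal) {
        if (orderTotal < 0) {
            throw new IllegalArgumentException("negative total"); // path 1
        }
        if (orderTotal >= 50.0) {
            return 0.0;                                           // path 2
        }
        return 4.99;                                              // path 3
    }

    // Black-box tests: chosen from the specification, without looking at the code.
    static boolean blackBoxTests() {
        return shippingCost(10.0) == 4.99    // below the threshold
            && shippingCost(50.0) == 0.0     // exactly at the threshold
            && shippingCost(120.0) == 0.0;   // above the threshold
    }

    // White-box test: chosen after reading the code, to execute path 1.
    static boolean whiteBoxTests() {
        try {
            shippingCost(-1.0);
            return false;                    // path 1 was not taken
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("black-box: " + (blackBoxTests() ? "PASS" : "FAIL"));
        System.out.println("white-box: " + (whiteBoxTests() ? "PASS" : "FAIL"));
    }
}
```

Together, the two sets of tests execute every path through `shippingCost`. The black-box set alone would never exercise path 1.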

Testing policies

- Exhaustive system testing is impossible so testing policies which define the required system test coverage may be developed.

- Examples of testing policies:

- All system functions that are accessed through menus should be tested.

- Combinations of functions (e.g. text formatting) that are accessed through the same menu must be tested.

- Where user input is provided, all functions must be tested with both correct and incorrect input.


Key Points

- Testing can only show the presence of errors in a program. It cannot demonstrate that there are no remaining faults.

- Development testing is the responsibility of the software development team. A separate team should be responsible for testing a system before it is released to customers.

- Development testing includes unit testing, in which you test individual objects and methods; component testing, in which you test related groups of objects; and system testing, in which you test partial or complete systems.

- When testing software, you should try to ‘break’ the software by using experience and guidelines to choose types of test case that have been effective in discovering defects in other systems.

- Wherever possible, you should write automated tests. The tests are embedded in a program that can be run every time a change is made to a system.


Glossary


A

acceptance testing

- Customer tests of a system to decide if it is adequate to meet their needs and so should be accepted from a supplier.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.757

B

black-box testing

- An approach to testing where the testers have no access to the source code of a system or its components. The tests are derived from the system specification.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.758

C

critical system

- A computer system whose failure can result in significant economic, human or environmental losses.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.761

F

fault avoidance

- Developing software in such a way that faults are not introduced into that software.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.763

fault detection

- The use of processes and run-time checking to detect and remove faults in a program before these result in a system failure.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.763

fault tolerance

- The ability of a system to continue in execution even after faults have occurred.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.763

fault-tolerant architectures

- System architectures that are designed to allow recovery from software faults. They are based on redundant and diverse software components.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.763

M

mean time to failure (MTTF)

- The average time between observed system failures. Used in reliability specification.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.765

P

pair programming

- A development situation where programmers work in pairs, rather than individually to develop code. A fundamental part of extreme programming.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.767

program inspection

- A review where a group of inspectors examine a program, line by line, with the aim of detecting program errors. A checklist of common programming errors often drives inspections.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.768

R

rate of occurrence of failure (ROCOF)

- A reliability metric that is based on the number of observed failures of a system in a given time period.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.768

reliability growth modeling

- The development of a model of how the reliability of a system changes (improves) as it is tested and program defects are removed.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.769

resilience

- A judgement of how well a system can maintain the continuity of its critical services in the presence of disruptive events, such as equipment failure and cyberattacks.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.769

S

scenario testing

- An approach to software testing where test cases are derived from a scenario of system use.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.770

system testing

- The testing of a completed system before it is delivered to customers.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.772-773

systems engineering

- A process that is concerned with specifying a system, integrating its components and testing that the system meets its requirements. Systems engineering is concerned with the whole socio-technical system (software, hardware and operational processes), not just the system software.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.774

T

test coverage

- The effectiveness of system tests in testing the code of an entire system. Some companies have standards for test coverage e.g. the system tests shall ensure that all program statements are executed at least once.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.773

test-driven development

- An approach to software development where executable tests are written before the program code. The set of tests is run automatically after every change to the program.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.773

U

unit testing

- The testing of individual program units by the software developer or development team.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.773

user interface design

- The process of designing the way in which system users can access system functionality, and the way that information produced by the system is displayed.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.774

user story

- A natural language description of a situation that explains how a system or systems might be used and the interactions with the systems that might take place.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.774

V

validation

- The process of checking that a system meets the needs and expectations of the customer.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.774

verification

- The process of checking that a system meets its specification.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.776

W

white-box testing

- An approach to program testing where the tests are based on knowledge of the structure of the program and its components. Access to source code is essential for white-box testing.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.774

wilderness weather system

- A system to collect data about the weather conditions in remote areas.

- Sommerville I. 2016 "Software Engineering" 10th Edition. Pearson Pp.774
