
Threads

- This week we will cover the following topics:

- Motivation and uses of threads

- Multi-threaded Processes

- POSIX Threads

- Thread Safety

- Thread Re-entrancy

- Threads with sockets


10.1 Processes and Threads

A process is an executing image of a program, including its data, register values and stack. It executes in a virtual machine environment and has system resources allocated to it (memory, files, I/O devices, ...). “The program runs within the process”. To the process, it looks as if it has the machine to itself. This is important: remember it!

A thread is a concept associated with a process. Essentially, a thread is an independently schedulable execution path through a process (more on this later). For now, assume that each process has only one thread.


10.2 Threads

Each process has state information associated with it: page tables, file descriptors, outstanding I/O requests, saved register values, etc. The volume of this state makes processes expensive to create and maintain, and expensive to switch between. At the same time, protecting each process's address space from other concurrently executing processes is generally a good thing; in some circumstances we may want to share memory between processes, but this is not the norm.

10.2.1 A Multi-threaded process

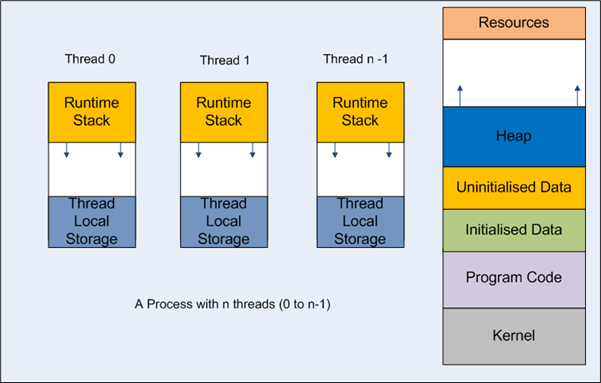

Figure 1: Multi-threaded process

Threads are an attempt to reduce this overhead when process creation, maintenance and context switching occur frequently: creating a thread costs roughly 1/100 to 1/1000 of the cost of creating a process. Threads are used for three main reasons: 1) to exploit multi-core architectures, in which threads map closely onto the underlying hardware topology; 2) to avoid long blocks in a process by letting the application continue processing, e.g. part of the process logic waits for I/O to complete on one thread while the user interface stays responsive on another; 3) to simplify programming when the algorithm lends itself to a concurrent solution, e.g. the garbage collection thread in the Java JRE and the .NET CLR.

Each thread independently executes a portion of a process, co-operating with the other threads executing in the same address space. Once created, all threads are collectively multi-tasked by the scheduler. Threads may execute different code blocks or the same ones, depending on the application. Threads have their own program counters, stacks and register values, but share the process's address space (hence shared global variables), file descriptors, etc. Consequently, context switching between threads in the same process has a smaller overhead than context switching between processes, and creating a new thread in an existing process is much cheaper than creating a new process.

Each thread has a separate run-time stack, i.e. its own local variables and its own stack pointer, but threads share everything else (global variables, the heap, etc.). However, some thread implementations provide a mechanism for global variables that are unique to a specific thread and shared across all the code blocks it runs, so the “global” scope is the thread rather than the process.

Barrier Synchronisation: a group of threads stops at a certain point until all the threads in the group have reached that point (the barrier). One simple example is a join, where a parent thread waits for its child thread(s) to complete by calling join (pthread_join() in POSIX threads; see Lab 8 material).

- API: Each thread system has its own API. POSIX threads (pthreads) is the standardised thread library on Linux/UNIX, used in our lab work and coursework, and a port to Windows exists. .NET has its own native thread classes. The POSIX Thread (pthread) library has a C binding and offers much the same functionality as other thread libraries, including:

- thread creation

- synchronisation

- POSIX threads are implemented in a library, so programs must be linked against it (-lpthread).

- However, cc usually accepts a special switch, -pthread, which expands to:

- -D_REENTRANT -lpthread

- -D_REENTRANT defines the _REENTRANT macro, which causes the system headers to select reentrant versions of several functions in the standard library.

Note that “non-threaded” processes can be viewed as single-threaded processes. Some API libraries and GUIs are implicitly multithreaded (you can’t escape threads!). Process-specific caches that accumulate during execution are usually flushed on a process context switch, whereas threads from a single process can use the same cache. On single-CPU architectures, threads are simply multitasked; on multi-core processors, threads can run in parallel, and if there are more threads than cores, the threads are multitasked across the available cores.


10.3 Concurrency Issues

Concurrency problems arise as a consequence of interrupts: remember that scheduling is driven by timer interrupts, so a context switch can take place unpredictably between any two statements. In fact it is worse than this: each high-level language construct maps to one or more machine instructions, and it is these instructions that are unpredictably interleaved (on multi-core processors, threads can also genuinely run at the same time). This can lead to occasional erroneous results and programs that are hard to debug.

The resulting erroneous behaviour is called a race condition. Ensuring that only one process or thread at a time can access a shared variable, i.e. serialising access to it, is called mutual exclusion. Code that is deliberately written to avoid race conditions is called safe code.


10.4 Thread Safety

- Although this problem can also occur with concurrent processes (e.g. when they use shared memory), it is one you hit immediately with multi-threading, because variables with program-wide (global) scope are implicitly shared. “A piece of code is thread-safe if it only manipulates shared data structures in a manner that guarantees safe execution by multiple threads at the same time.” (Wikipedia). At first glance, the solution may appear obvious:

- identify the regions of code that access the shared variable; each such region is called a critical section

- make sure only one process or thread is in a critical section at a time

However, the problem can be sidestepped completely by avoiding writes to shared global and static variables. Local variables are allocated on the stack, and each thread has its own stack, so other threads cannot interfere with them (unless a pointer to them is shared).

10.4.1 Mutexes

A mutex can be used to guarantee that only one thread can enter a critical section at a time. See:

🔗 http://www.yolinux.com/TUTORIALS/LinuxTutorialPosixThreads.html

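However, mutexes are locks: they add overhead and serialise execution, so use them with care. If used wrongly, your code can deadlock, e.g. when two threads each hold one mutex while waiting for the mutex the other holds.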

10.4.2 Reentrancy Issues

Reentrancy is a more advanced issue, caused by interrupts (signal handling) and sometimes by recursion, that can create complex synchronisation problems. What happens if an interrupt arrives and the interrupt handler calls the same function before the first call has finished executing? A properly written reentrant function can be interrupted at any time and resumed later without loss of data. See:

🔗 http://www.ibm.com/developerworks/linux/library/l-reent/index.html

Be aware of these issues and of the guidance for avoiding reentrancy problems, but we do not need to consider them further. Note that reentrancy also affects library code, which should be written to be reentrant; sometimes both reentrant and non-reentrant versions of a library function exist, and reentrant code must use the reentrant versions.

- Summary

- Thread-safe code can be called simultaneously by multiple threads even when the threads reference shared data, provided that all access to the shared data is safely serialised.

- Reentrant code can be called simultaneously by multiple threads provided that each call references only its own local variables and the code is written with care.


10.5 Putting it all together...

- A stream sockets server can be improved by introducing threads:

- the main thread keeps the same code up to listen(); accept() is then placed in an infinite loop

- when accept() returns, a thread is spawned to handle the new client connection and its socket

- multiple clients can therefore be connected at once, each on a separate thread with (if properly coded) isolated data

- the thread terminates when the connection is dropped

- a signal handler does the cleanup in the server (typically on <Ctrl>+C, i.e. SIGINT)

- the client code remains the same

- See Lab 8 threads-socket-server program.

- The coursework server should be based on this.


10.6 Miscellaneous Examples

- Code only:

- stat: using the stat system call to query inodes

- scandir: alternative way to process directories

- fork: creating processes with fork() and exec()

- pipes: interprocess communication with anonymous pipes


10.7 Final Lab Material

- This must all have been tried as practical work on Linux in the labs:

- Reviewed code from Lab 8 (threads directory):

- creating threads (pthread1)

- interleaved execution (interleaving)

- basic synchronisation (join1 and mutex1)

- threads with a sockets server (threads-socket-server; use the same client as last time, message-socket-client)

- Reviewed code from Lab 9:

- stat: using the stat system call to query inodes

- scandir: alternative way to process directories

- fork: creating processes with fork() and exec()

- pipes: interprocess communication with anonymous pipes