-

Introduction

The aim of this chapter is to introduce typical mitigation tools and techniques that are often used to prevent or detect attacks. The chapter focuses on concepts such as Defence in Depth, Patch Management and Access Control, and then goes on to explain firewalls and Intrusion Detection/Prevention Systems (IDPS).

-

Defence in Depth

Before covering any particular technology used in defending computer environments, it is important to discuss how these technologies should be deployed. One typical strategy used in modern networks is to adopt a layered approach, most often referred to as defence in depth. The concept is taken from military strategy, where it is used to delay an advancing enemy and so give the defender time to reorganise.

In cybersecurity terms, defence in depth ensures that several layers of defence exist in a network or computer system infrastructure, so that if one layer is breached, the attacker is still faced with another.

Considering that the ways of attacking a network are vast, an organisation should not rely on a single method of defence to secure its infrastructure. For instance, a firewall on its own is not enough to secure a network: if the firewall is compromised, so is the network. Additionally, if an attack is started from the inside, a standard firewall is of no use, because the malicious traffic never crosses it. In this case, an IDS/IPS monitoring internal traffic provides a stronger defence.

In general terms, defence in depth is a strategy that aims to ensure that an attack is detected before it can do any damage. The key is to provide several, differentiated layers of defence, each presenting a different challenge to the attacker. This ensures that an attacker who is capable of bypassing one layer cannot immediately get past the subsequent ones.

Information Security Framework

The first step for an organisation to secure its assets is to develop an Information Security (IS) framework. This should cover several areas of information security, including policies, standards and procedures as well as security incident management.

The overall idea behind a security framework is to make sure that everyone within an organisation is aware of, and understands, the requirements and their own role and responsibilities in achieving information assurance. There cannot be security without everyone playing their part to achieve it.

Having said that, overall responsibility for the assurance of the asset(s) is given to one person (generally a senior manager), supported by a team. This person should ensure that everyone plays their part to achieve the required level of security.

Generally, this is achieved through written policies, standards and procedures, which provide, in very simple terms, a set of guidelines for physical, procedural and technical security measures.

While it is important to be aware of the existence of these, physical and procedural controls are outside the scope of this course. However, some technical areas should be considered and are introduced next.

-

Patch Management

Patching systems in the network is essential to provide security. You could have the best firewall and IDSs in place, but if workstations are running outdated or unpatched applications and operating systems, it is all for nothing. During the module we have seen and used, on several occasions, exploits that grant full access to a system (Windows or Linux) simply because an existing software patch had not been applied. The result of a poor patch management policy could be catastrophic.

Patch management involves obtaining, testing and installing software patches on a computer system. It is an important task that keeps the software used in an organisation up to date.

One important point to remember in the context of patch management is that patches must be tested in a safe environment (i.e. not connected to the main network) before being deployed. This ensures that a patch does not render a system or network unstable.
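As a sketch of the audit step only (obtaining, testing and deploying patches are not shown), the Python below flags hosts whose installed version is older than the first version containing a fix. The host names, package names and version numbers are hypothetical, and version strings are assumed to be purely numeric and dot-separated; real package managers use richer version rules.

```python
from dataclasses import dataclass


def parse_version(text: str) -> tuple:
    """Turn a purely numeric dotted version such as '2.4.57' into a tuple
    of integers, so that versions compare numerically (2.4.10 > 2.4.9)."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Package:
    host: str
    name: str
    installed: str


def find_unpatched(inventory, minimum_patched):
    """Return the packages whose installed version is older than the first
    version known to contain the security fix. Packages with no advisory
    entry are ignored."""
    outdated = []
    for pkg in inventory:
        required = minimum_patched.get(pkg.name)
        if required is not None and parse_version(pkg.installed) < parse_version(required):
            outdated.append(pkg)
    return outdated


if __name__ == "__main__":
    # Hypothetical inventory and advisory data, for illustration only.
    inventory = [
        Package("ws01", "webserver", "2.4.9"),
        Package("ws02", "webserver", "2.4.12"),
        Package("db01", "tlslib", "3.0.1"),
    ]
    minimum_patched = {"webserver": "2.4.10", "tlslib": "3.0.7"}
    for pkg in find_unpatched(inventory, minimum_patched):
        print(f"{pkg.host}: {pkg.name} {pkg.installed} needs patching")
```

Comparing integer tuples rather than raw strings matters here: as strings, "2.4.9" would sort after "2.4.10".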

System Hardening

System hardening refers to a process whose overall aim is to reduce the “attack surface”. In practical terms, this means making sure that a system performs a specific job (preferably a single job), that no unnecessary services are running, that it is fully patched and regularly checked, and so on.

The list of tasks that need to be completed to harden a system can be quite long, and it varies according to the job the system performs, the operating system being used, the IS policy of the organisation and much more.
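A minimal sketch of one hardening check, assuming a hypothetical baseline that lists the services each system role is allowed to run: anything running outside that baseline is reported as a candidate for removal. How the list of running services is collected from a real host is not shown. An unknown role gets an empty baseline, so everything on it is flagged (a deliberately conservative default).

```python
# Hypothetical baselines: the only services each role is expected to run.
ROLE_BASELINES = {
    "web-server": {"sshd", "nginx"},
    "database": {"sshd", "postgresql"},
}


def unnecessary_services(role: str, running: set) -> set:
    """Return the running services that are not in the role's baseline.
    An unknown role has an empty baseline, so every service is flagged."""
    allowed = ROLE_BASELINES.get(role, set())
    return running - allowed


if __name__ == "__main__":
    running = {"sshd", "nginx", "telnetd", "cups"}
    print(sorted(unnecessary_services("web-server", running)))  # ['cups', 'telnetd']
```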

-

Access Control

Access control is a security mechanism that attempts to ensure that only legitimate users can access specific resources. Access control relies on the AAA principles (authentication, authorisation and accounting).

Authentication

Authentication ensures that the identity of a subject or resource is the one claimed. Authentication can be based on:

- Something you know – The most typical example of this is a password.

- Something you are – This is biometrics, such as fingerprints, retina scan, etc.

- Something you have – This could be a token such as an ID card.

When only one of the above methods is used (e.g. a password), authentication is described as single-factor. When two different methods are combined (e.g. a bank card with a PIN), it is described as two-factor, and when two or more are combined it is more generally described as multi-factor.
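The sketch below combines two of the factors listed above: a password (something you know), checked against a salted PBKDF2 hash from Python's standard `hashlib`, and a code from a hardware or software token (something you have), computed with the TOTP algorithm of RFC 6238. The iteration count and 16-byte salt are illustrative choices, and a production system would normally also accept codes from adjacent time steps to allow for clock drift; this is not presented as how any particular product works.

```python
import hashlib
import hmac
import os
import struct
import time
from typing import Optional

PBKDF2_ITERATIONS = 200_000  # illustrative work factor; tune to local policy


def hash_password(password: str, salt: Optional[bytes] = None) -> tuple:
    """Derive a key from the password; store (salt, key), never the password."""
    if salt is None:
        salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)
    return salt, key


def check_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Factor 1, something you know."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, stored_key)  # constant-time comparison


def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """Factor 2, something you have: the code shown by the user's token.
    RFC 6238 TOTP with HMAC-SHA1 and RFC 4226 dynamic truncation."""
    counter = int(at // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def authenticate(password, code, salt, stored_key, secret, now=None) -> bool:
    """Two-factor check: both the password and the token code must be valid."""
    if now is None:
        now = time.time()
    return check_password(password, salt, stored_key) and hmac.compare_digest(
        code, totp(secret, now)
    )
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` returns `"94287082"`, matching the value published in the RFC.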

Authorisation

After the user is authenticated, authorisation services determine which resources the user can access and which operations the user is allowed to perform. This could range from simple access to a file or directory to the amount of space allocated on a network drive.

Accounting

Tracking and keeping a log of the actions taken by an authenticated user is referred to as accounting. Accounting records what users do, including what they access, how long they access each resource for, and any changes they make. Effectively, accounting keeps track of how network resources are used.
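A minimal sketch of accounting in Python, assuming an in-memory list as a stand-in for a protected log store (real deployments typically send accounting records to a central server, for example via RADIUS accounting). Each access is wrapped in a context manager that records the user, resource, action, start time, duration and whether a change was made; the names used are hypothetical.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class AccountingRecord:
    user: str
    resource: str
    action: str
    started: float          # wall-clock time the access began
    duration: float = 0.0   # seconds the resource was in use
    modified: bool = False  # whether the user changed the resource


AUDIT_LOG = []  # stand-in for a protected, append-only log store


@contextmanager
def accounted(user: str, resource: str, action: str):
    """Record who accessed what, for how long, and whether it was changed.
    The record is written when the block exits, even if the block raises."""
    record = AccountingRecord(user, resource, action, started=time.time())
    t0 = time.monotonic()  # monotonic clock for a reliable duration
    try:
        yield record  # the caller sets record.modified = True if it changes the resource
    finally:
        record.duration = time.monotonic() - t0
        AUDIT_LOG.append(record)
```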

Access Control Models

Different models of access control exist. Some of them are briefly described below:

- Discretionary Access Control, or DAC, where the owner of an object specifies which subjects can access the object. In other words, access to resources by other subjects is granted by the owner. This is a very common form of access control, used by most operating systems, such as Windows, Linux and macOS.

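- Mandatory Access Control, or MAC, where the system, rather than the owner, decides who or what can access the object, based on a centrally defined security policy that ordinary users cannot override. This form of access control is implemented in Security-Enhanced Linux (SELinux).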

- Role Based Access Control, or RBAC, where access to resources is granted based on the roles of subjects within an organisation. This method of access control has become very popular and is often implemented in large enterprises.
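The difference between DAC and RBAC can be sketched in a few lines of Python; MAC is not shown. All users, roles, objects and permission names are hypothetical. In the DAC part only the owner of an object may grant access to it; in the RBAC part permissions belong to roles, and users gain them only through role membership.

```python
from dataclasses import dataclass, field


@dataclass
class DacObject:
    """DAC: the owner decides who else may access the object."""
    owner: str
    acl: dict = field(default_factory=dict)  # subject -> set of permissions

    def grant(self, granted_by: str, subject: str, permission: str) -> None:
        if granted_by != self.owner:
            raise PermissionError(f"{granted_by} is not the owner")
        self.acl.setdefault(subject, set()).add(permission)

    def allowed(self, subject: str, permission: str) -> bool:
        return subject == self.owner or permission in self.acl.get(subject, set())


# RBAC: permissions attach to roles, and users are assigned roles.
ROLE_PERMISSIONS = {
    "accountant": {("ledger", "read"), ("ledger", "write")},
    "auditor": {("ledger", "read")},
}
USER_ROLES = {"carol": {"auditor"}, "dave": {"accountant"}}


def rbac_allowed(user: str, resource: str, permission: str) -> bool:
    """A user may act only if one of their roles carries the permission."""
    return any(
        (resource, permission) in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )
```

In the RBAC version, moving a user to another job is a single change to `USER_ROLES`, which is one reason the model suits large enterprises.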

-

Firewalls

Introduction

In this section we introduce the concept of firewalls. Firewalls are used to control access to an internal network from connections coming from the Internet. A firewall creates a barrier between untrusted networks (e.g. the Internet) and the internal network, which is assumed to be trustworthy. Without one, a network is exposed to attacks: network security would be left entirely to each internal host, which is neither secure nor manageable.

There is no standard definition of a firewall. The term can cover anything from appropriate software running on a host, a gateway router operating with Access Control Lists (ACLs) on its interfaces, or a router running dedicated firewall applications, through to a dedicated firewall device such as a Cisco Adaptive Security Appliance (ASA), which offers additional security features such as Authentication, Authorisation and Accounting (AAA). The term can even be extended to cover a group of devices offering defence in depth to a network. A robust definition of a firewall might be: an arrangement of devices and/or software set up to limit access to a network.

Requirements of a firewall

The primary requirement of a firewall is to enforce the security policy of the network. The firewall monitors all traffic flowing into and out of the network in order to filter out disallowed traffic, as defined by established rules; it can filter both incoming and outgoing traffic. Additional requirements include:

- A firewall should be resistant to attack. A Denial of Service attack on the firewall will interfere with the operation of the network, and if the firewall is compromised, the network's exposure to risk increases.

- Traffic needs to be forced to go through the firewall. There is no point in maintaining a top-of-the-range firewall if there are alternative routes into the network that defeat its security goals.

The Demilitarised Zone (DMZ)

When considering the design and operation of firewalls, we think of the gateway router (running a firewall) as being attached to three regions:

- Internal network;

- External network;

- Demilitarised zone (DMZ). (For a small company there may be no DMZ.)

This simple view can be enhanced by including a defence in depth which might include a dedicated firewall between the router and the internal hosts.

This three-region design is called a three-pronged approach. A weakness of this approach is that a single successful attack on the firewall breaches two networks: the DMZ and the internal network!

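Improvements on the basic design place the outside, the DMZ and the inside in sequence, with the DMZ between two layers of filtering, so that compromising the outer layer does not by itself expose the internal network. The DMZ can also be partitioned into zones itself, limiting the effect of a successful attack.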

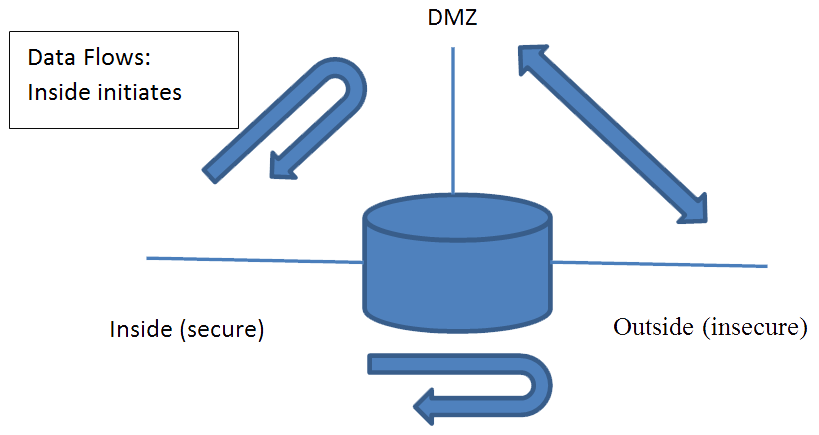

Data Flows

Data flowing between the internal, external and DMZ areas is treated differently from the point of view of security. No data is allowed to cross from the external (insecure) network into the internal (secure) network unless it is in response to a conversation initiated from within the secure network.

The firewall is assumed to be operating on the gateway. Identified (allowed) packets may pass from the external network into the DMZ (this is necessary for the business operation of the company), and the DMZ sends its responses back out to the external network. Broadly, traffic is not allowed from the DMZ to the private network except in response to conversations initiated from within the private network.

Traffic is allowed out of the private network to both the DMZ and the external network, and responses from these regions are dynamically allowed back into the private network; other traffic is typically blocked.
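The data flows above can be summarised as a small policy table. In this sketch (zone names as in the text, everything else an assumption) a new conversation is allowed only for the listed zone pairs, and a reply is allowed if it travels in the reverse direction of an allowed conversation. Whether a packet really is a reply is simply passed in as a flag here; a real firewall has to establish that itself by tracking connection state.

```python
# Zone pairs (source, destination) that may START a conversation.
NEW_CONVERSATION_ALLOWED = {
    ("internal", "dmz"),
    ("internal", "external"),
    ("external", "dmz"),  # identified traffic only, e.g. to a public web server
}


def permitted(src_zone: str, dst_zone: str, is_reply: bool) -> bool:
    """Return True if the gateway firewall should forward the packet."""
    if is_reply:
        # A reply flows in the reverse direction of an allowed conversation.
        return (dst_zone, src_zone) in NEW_CONVERSATION_ALLOWED
    return (src_zone, dst_zone) in NEW_CONVERSATION_ALLOWED
```

Everything not listed, such as a new conversation from the external network or the DMZ into the internal network, falls through to a deny.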

Firewalls can also be set up between a company’s networks when there are differing security considerations relating to the operation of each of the networks.

Firewall Functionality

Dedicated firewalls are multi-purpose devices that incorporate additional functionality such as AAA and intrusion prevention. If we stick to basic firewall considerations, the functions we expect a firewall to support are:

- The prevention of access to internal networks and services by untrusted external networks.

- The prevention of internal users and systems from accessing undesired external systems and services.

- The implementation of a company’s access control policies.

- The protection of the system from exploitation through known protocol weaknesses.

Note that a firewall is not, in itself, sufficient protection for a system. Some of the drawbacks of firewalls are that they add latency to traffic, and that some applications may not be able to pass through them without special consideration. They are also prone to configuration mistakes, which can have serious consequences (ensure that there is a back-up and/or recovery plan).

Having a single firewall at the entrance to the network represents a single point of failure. A more secure option is a layered approach (defence in depth: NAT services, a firewall device, routers running ACLs, software firewalls on hosts, etc.).

Firewall types

Static packet filtering: standard and extended ACLs offer this type of defence. Each packet is judged in isolation against its header fields (addresses, protocol and ports). These filters are simple to implement and add little latency. Their weaknesses include susceptibility to IP spoofing and poor handling of fragmented packets.
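A sketch of static packet filtering in the style of an extended ACL, using Python's standard `ipaddress` module: rules are checked top-down, the first match decides, and unmatched traffic hits an implicit deny. The rules and addresses are hypothetical. Because each packet is judged only on its own header fields, a packet with a forged source address that satisfies a permit rule is let through, which is the spoofing weakness mentioned above.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                     # "permit" or "deny"
    protocol: str                   # "tcp", "udp", or "ip" meaning any protocol
    src: str                        # source network in CIDR notation
    dst: str                        # destination network in CIDR notation
    dst_port: Optional[int] = None  # None matches any port

    def matches(self, protocol: str, src_ip: str, dst_ip: str, dst_port: Optional[int]) -> bool:
        # Only the header fields of this one packet are consulted: no session state.
        return (
            self.protocol in ("ip", protocol)
            and ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.src)
            and ipaddress.ip_address(dst_ip) in ipaddress.ip_network(self.dst)
            and (self.dst_port is None or self.dst_port == dst_port)
        )


# Hypothetical inbound ACL; 203.0.113.0/24 is a documentation address range.
INBOUND_ACL = [
    # Anti-spoofing: private source addresses should never arrive from outside.
    Rule("deny", "ip", "10.0.0.0/8", "0.0.0.0/0"),
    # Allow HTTPS to the public web server only.
    Rule("permit", "tcp", "0.0.0.0/0", "203.0.113.10/32", 443),
]


def filter_packet(protocol: str, src_ip: str, dst_ip: str, dst_port: Optional[int] = None) -> str:
    """First matching rule wins; anything unmatched is denied."""
    for rule in INBOUND_ACL:
        if rule.matches(protocol, src_ip, dst_ip, dst_port):
            return rule.action
    return "deny"  # implicit deny at the end of the list
```

Rule order matters: if the permit rule came first, a spoofed `10.x.x.x` packet to port 443 would be accepted before the anti-spoofing rule was ever reached.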

Proxy servers: a server that sits between, for example, the edge router and the true server, making it difficult to launch an attack against the true server. A proxy server can operate on packets up to layer 7 and is capable of detailed analysis of their contents. Proxy servers are also known as application layer gateways (ALG). A specialised type of proxy based on the SOCKS protocol simply verifies that the connection between the internal host and the external host is valid, and nothing more.

[Note that proxy servers have the additional security advantage of hiding the inside hosts' addresses from the outside.]

Stateful packet filtering: packets exiting the secure network are analysed by the stateful firewall, and data concerning each session is stored in a stateful database (the state table). Return packets that are consistent with the state of the session (as determined by looking up the table) are allowed back into the secure network; other packets are dropped. This method offers protection against some of the DoS and spoofing attacks to which static packet filters are vulnerable. However, not all protocols lend themselves to state analysis; for example, UDP and ICMP are connectionless and carry no explicit session state.
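A minimal sketch of the state table idea, assuming sessions are identified by protocol, addresses and ports: outbound packets record a session, and inbound packets are admitted only if they travel in the reply direction of a recorded session. Real stateful firewalls also apply policy to outbound traffic, track TCP flags and sequence numbers, and expire idle entries; none of that is modelled here.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

    def reply(self) -> "Flow":
        """The same session seen from the other direction."""
        return Flow(self.protocol, self.dst_ip, self.dst_port, self.src_ip, self.src_port)


class StatefulFirewall:
    def __init__(self) -> None:
        self.state_table = set()  # sessions initiated from the secure network

    def outbound(self, flow: Flow) -> bool:
        """Traffic leaving the secure network is allowed and recorded."""
        self.state_table.add(flow)
        return True

    def inbound(self, flow: Flow) -> bool:
        """Admit only packets that are replies to a recorded session."""
        return flow.reply() in self.state_table
```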

Application inspection: a more complex variety of filtering that monitors the state of ‘conversations’ flowing across the firewall all the way up to layer 7. It can follow dynamically generated ports that arise as part of a session (this occurs when an application protocol needs to open new ports for its operation, e.g. FTP or multimedia applications).

Transparent firewall: a firewall operating on a layer 2 device, i.e. a device that does not sit between two IP addresses as in the previous examples. A transparent firewall might run on a switch, even though it still inspects traffic at layer 3 and above. It can operate as any of the previously mentioned types; the distinction comes from the location at which it operates.

NAT: this is usually offered as a solution to IPv4 address exhaustion, but it also acts as a basic form of firewall (often working in conjunction with some of the methods above). NAT makes it difficult for external devices to connect to trusted devices on the inside, because an unsolicited inbound connection has no translation entry unless an inside host has created one.
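A sketch of port address translation (many inside hosts sharing one public address) showing why NAT hinders inbound connections: the translation table is only populated by outbound traffic, so an unsolicited inbound packet has no entry to map to and is dropped. The addresses and starting port are hypothetical; real implementations also key entries on the remote endpoint and protocol and expire them after inactivity.

```python
import itertools
from typing import Optional


class PatRouter:
    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)  # next free public port
        self.outside_to_inside = {}  # public port -> (inside ip, inside port)
        self.inside_to_outside = {}  # (inside ip, inside port) -> public port

    def translate_outbound(self, inside_ip: str, inside_port: int) -> tuple:
        """Create (or reuse) a mapping when an inside host sends traffic out."""
        key = (inside_ip, inside_port)
        if key not in self.inside_to_outside:
            port = next(self._ports)
            self.inside_to_outside[key] = port
            self.outside_to_inside[port] = key
        return self.public_ip, self.inside_to_outside[key]

    def translate_inbound(self, public_port: int) -> Optional[tuple]:
        """None means no mapping exists, so the packet is dropped."""
        return self.outside_to_inside.get(public_port)
```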

Host based: these firewalls run as software on the host.

-

Intrusion Detection and Prevention Systems

Introduction

Firewalls are limited in their capabilities. For example, they cannot prevent certain malware from entering the network. Additional approaches that increase the depth of the defences on a network should therefore be added. Two examples are intrusion detection systems (IDS) and intrusion prevention systems (IPS), with IDPS used as a general term covering both. The primary function of these systems is to monitor network traffic for anomalies.

IPS and IDS

Intrusion detection is an automated process that monitors traffic flowing into and out of the network, analyses it for signs of malicious activity, and logs and signals anything suspicious. The IDS does not take any corrective action itself; it relies on other network components to act once it has signalled an identified problem. The IPS, on the other hand, performs all the activities of the IDS but goes one step further and can take corrective action when an incident is identified.

An important difference in the operation of these two security ‘devices’ is that an IDS operates in parallel with the traffic coming into and out of the network (using a wiretap, such as a network tap or a mirrored switch port) and so does not degrade network performance. An IPS operates inline (directly between the source and destination) and adds overhead to network traffic.

Problems that can be identified by an IDPS include the presence of malware, the presence of hackers on the system and even abuse by authorised users. Not all incidents are malicious in nature; some arise from user errors. An IDPS can itself make two types of error:

- The false positive – reports an intrusion when none exists.

- The false negative – fails to identify a genuine threat.
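When an IDPS is evaluated against traffic whose true nature is known (for example, replayed traffic in a test lab), the two error types can be counted as below. The function and its inputs are illustrative; the rates are the share of benign events wrongly flagged and the share of real intrusions missed.

```python
def error_rates(alerts: list, actual: list) -> dict:
    """alerts[i] is True if the IDPS raised an alert for event i;
    actual[i] is True if event i really was an intrusion."""
    if len(alerts) != len(actual):
        raise ValueError("alerts and actual must have the same length")
    false_pos = sum(1 for a, t in zip(alerts, actual) if a and not t)
    false_neg = sum(1 for a, t in zip(alerts, actual) if t and not a)
    benign = sum(1 for t in actual if not t)
    intrusions = len(actual) - benign
    return {
        "false_positives": false_pos,  # alert raised, no intrusion
        "false_negatives": false_neg,  # intrusion missed
        "false_positive_rate": false_pos / benign if benign else 0.0,
        "false_negative_rate": false_neg / intrusions if intrusions else 0.0,
    }
```

Tuning usually trades one rate against the other: lowering an alert threshold reduces false negatives at the cost of more false positives.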

IDPS vs Firewall

An IDPS is not a firewall. A firewall accepts or denies packets based on information carried by each packet, whereas an IDPS bases many of its decisions on traffic flows, looking for anomalous behaviour across the flow. However, this distinction is becoming blurred with advances in firewalls.

A traditional firewall cannot defend against abuse inside the network and will not modify packets. Firewall security is based on the concept of the network edge: the assets on the inside, the hackers on the outside. This paradigm is becoming less effective as networks become more open.

The IDPS is generally positioned behind the firewall.

-

IDPS Deployment

Components of an IDPS

Sensor – the location (device) where the packets on the network are monitored. If the IDPS is host based the sensor is referred to as an agent. There may be more than one of these on a network.

Management Server – a centralised server that receives and evaluates information from the sensors. The server can correlate events triggered by multiple sensors. In very large networks there may be several servers, possibly operating in a hierarchy.

Database Server – a repository for the information collected by the sensors. A sensor's memory would soon be overwhelmed if it had to keep logs of activity for any length of time, so this information is typically stored centrally.

Console – software that interfaces between the IDPS system and network administrators, usually in the form of a GUI.

For simplicity, in the following we do not distinguish between these components when discussing the operation of the IDPS, and treat the IDPS as a standalone device.

Working together

Note that IDS and IPS are not mutually exclusive technologies. Because the IDS does not operate inline, and hence does not affect network performance, it can carry out more in-depth packet analysis, allowing the IPS to remain focused on critical packet flows.

An advantage of an IPS is that it can drop or ‘clean up’ suspicious packets, for example by removing suspect email attachments. It can also reconfigure firewalls to block a stream of traffic from a particular source that has been identified as malicious. On the downside, it can become a bottleneck, since all traffic must be inspected as part of its journey across the network.
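The firewall-reconfiguration behaviour can be sketched as a simple reaction policy: alerts are counted per source address and, once a source reaches a threshold, a block request is sent exactly once. The threshold and the `block_source` callback are hypothetical stand-ins for whatever interface an IPS actually uses to push rules to a firewall.

```python
from collections import Counter
from typing import Callable


class ShunPolicy:
    def __init__(self, block_source: Callable[[str], None], threshold: int = 3) -> None:
        self.block_source = block_source  # hypothetical hook to the firewall
        self.threshold = threshold        # alerts needed before blocking
        self.alerts = Counter()
        self.blocked = set()

    def on_alert(self, src_ip: str) -> None:
        """Count an alert for this source; block it once the threshold is hit."""
        if src_ip in self.blocked:
            return  # already blocked, nothing more to do
        self.alerts[src_ip] += 1
        if self.alerts[src_ip] >= self.threshold:
            self.block_source(src_ip)  # e.g. add a deny rule for this source
            self.blocked.add(src_ip)
```

A threshold above one is a guard against false positives: a single spurious alert should not cut off a legitimate source.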

We identify two types of IDPS: host based and network based. A network based IDPS (NIDS, NIPS) may be configured on a router, on an application specific device, or as part of a more general security device. Host based IDPS (HIDS, HIPS) are deployed on the host. These two approaches are not mutually exclusive and can be used together as part of a defence in depth strategy.

As well as the host and network based systems there are Wireless Intrusion Detection (Prevention) Systems (WID(P)S) and Network Behavioural Analysis (NBA) systems. These are not covered in this course.

IDPS Management

An IDPS should be connected to a separate management network or to a management VLAN. A physically separate network is preferred, because a DoS attack on the production network would otherwise also interfere with the operation of the IDPS.

It is important that the IDPS is kept secure. If it is undermined, it will be ineffective at stopping attacks, and it may contain information useful to an attacker. Hence there should be a policy covering the monitoring and testing of its performance, vulnerability assessments and the implementation of updates.

Access to the IDPS should be restricted to those who need it.

IDPS require extensive tuning and customisation to be effective (i.e. to reduce false positives and false negatives). This involves the experimental determination of thresholds and of blacklists and whitelists.

IDPS Operation

We briefly describe three modes of IDPS operation: signature based, anomaly based and stateful protocol analysis.

-

Modes of Operation

Signature Based Detection

Signatures are patterns of behaviour identified as threats and stored in a file. A signature based system watches the traffic on the network for patterns matching the signatures on file and, if it finds one, reacts accordingly. As new exploits are discovered, their signatures are determined and recorded. Signature detection for an IPS can be done by identifying individual exploits through their explicit signature (exploit-facing signatures) or through broader vulnerability-facing signatures.

Vulnerability-facing signatures target the underlying vulnerability rather than a specific exploit, and so identify the many possible ways in which that vulnerability can be exploited. Thus, these signatures can provide protection against variants of an attack. This type of ‘signature’ is potentially capable of identifying attacks that are not yet known!

These signatures are found to be more effective than the more basic exploit-facing signatures but are much harder to prepare and can be prone to false positives!
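To contrast the two signature styles, assume a hypothetical text protocol whose `USER` command overflows a 64-byte buffer. The exploit-facing signature below matches the exact payload of one invented exploit, while the vulnerability-facing signature matches any `USER` argument long enough to trigger the overflow, so it also catches a re-padded variant. It would equally fire on a legitimate over-long user name, which illustrates why such signatures can be prone to false positives.

```python
import re

# Exploit-facing: the exact bytes sent by one (invented) exploit tool.
EXPLOIT_SIGNATURE = re.compile(rb"USER A{100}\x90\x90\xeb\x1f")

# Vulnerability-facing: ANY USER argument longer than the 64-byte buffer,
# however the attacker pads or varies it.
VULNERABILITY_SIGNATURE = re.compile(rb"^USER [^\r\n]{65,}", re.MULTILINE)


def inspect(payload: bytes) -> list:
    """Return the names of the signatures that match the payload."""
    hits = []
    if EXPLOIT_SIGNATURE.search(payload):
        hits.append("exploit-facing")
    if VULNERABILITY_SIGNATURE.search(payload):
        hits.append("vulnerability-facing")
    return hits
```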

Anomaly Based Detection

Anomaly Based Detection looks at the statistical behaviour of the system and compares current values against previously defined ‘normal’ values, looking to identify discrepancies.

Statistical anomaly detection takes samples of network traffic at random and compares them to a pre-calculated baseline performance level. When the observed network activity falls below or rises above predefined statistical thresholds, the IDPS takes appropriate action.
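A sketch of statistical anomaly detection, assuming the monitored metric is connections per minute and that thresholds are set at the baseline mean plus or minus k standard deviations (k = 3 here). The sample values are invented; in practice the baseline and k are exactly the kind of thresholds that have to be tuned.

```python
import statistics


class Baseline:
    def __init__(self, normal_samples: list, k: float = 3.0) -> None:
        # Baseline learned from traffic observed during normal operation.
        self.mean = statistics.mean(normal_samples)
        self.stdev = statistics.stdev(normal_samples)  # needs at least 2 samples
        self.low = self.mean - k * self.stdev
        self.high = self.mean + k * self.stdev

    def check(self, value: float) -> str:
        """Classify one new sample against the thresholds."""
        if value < self.low:
            return "anomaly: below threshold"
        if value > self.high:
            return "anomaly: above threshold"
        return "normal"
```

A sudden drop can be as significant as a spike (for example, a service that has stopped responding), which is why both thresholds are checked.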

The collected statistical data can relate either to the collective operations on the network or to the individual behaviour of users. (Note that a firewall cannot detect unacceptable practices inside the network.)

Stateful Protocol Analysis

Stateful protocol analysis monitors a session’s protocol states, looking for deviations from what is considered safe as defined in predetermined profiles.

Information about the evolution of the protocol states is maintained in a state table for the period of the connection.

The information held in the state table could eventually swamp the resources of the IDPS, so sessions whose state never evolves to completion must eventually be written off. The time allowed before this happens is called the event horizon. The event horizon varies with the signature and represents a trade-off between the resources used and the time period over which the attack is likely to take place.

The term “protocol analysis” means that the IDS sensor analyses the traffic of identified protocols, looking for suspicious behaviour. For each protocol, the analysis is based not only on protocol standards, particularly the RFCs, but also on how things are implemented in the “real world”. Many implementations violate protocol standards, so it is very important that signatures reflect how things are really done rather than how they ideally should be done; otherwise many false positives and false negatives will occur. Protocol analysis techniques observe all traffic involving a particular protocol and validate it, alerting when the traffic does not meet expectations.
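As an illustration of a state table with an event horizon, the sketch below uses the TCP three-way handshake (SYN, then SYN-ACK, then ACK) as the expected protocol profile. Steps out of order raise an alert, completed handshakes are removed from the table, and half-finished sessions are written off once they are older than the event horizon. The 30-second horizon and the session identifiers are assumptions, and packet direction is ignored for brevity.

```python
# Expected next step of the handshake, given the last step seen.
EXPECTED_NEXT = {None: "SYN", "SYN": "SYN-ACK", "SYN-ACK": "ACK"}


class HandshakeTracker:
    def __init__(self, event_horizon: float = 30.0) -> None:
        self.event_horizon = event_horizon
        self.state_table = {}  # session id -> (last step seen, time seen)

    def observe(self, session: str, step: str, now: float) -> str:
        self.expire(now)
        last = self.state_table.get(session, (None, now))[0]
        if step != EXPECTED_NEXT.get(last):
            self.state_table.pop(session, None)  # deviation from the profile
            return f"alert: {step} after {last or 'nothing'}"
        if step == "ACK":
            del self.state_table[session]  # handshake complete, stop tracking
            return "established"
        self.state_table[session] = (step, now)
        return "in progress"

    def expire(self, now: float) -> None:
        """Write off sessions whose state has not evolved within the horizon."""
        stale = [s for s, (_, t) in self.state_table.items() if now - t > self.event_horizon]
        for s in stale:
            del self.state_table[s]
```

A longer horizon catches slower attacks but keeps more half-open entries in memory, which is the trade-off described above.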

Blacklists

An IPS can maintain a blacklist of proscribed ports, IP addresses, file extensions and URLs that are known to support malicious attacks. Traffic matching these entries can then be denied if it appears on the network.

In contrast, a whitelist can be maintained that recognises safe ports etc. For this to work efficiently, it is best to maintain the list on a per-protocol basis.
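A sketch of blacklist and per-protocol whitelist checks, applied in that order. Every list entry is a hypothetical example (the networks are documentation ranges); real lists would come from threat-intelligence feeds and the organisation's own policy.

```python
import ipaddress

# Hypothetical blacklists.
BLACKLIST_NETWORKS = [ipaddress.ip_network("198.51.100.0/24")]
BLACKLIST_EXTENSIONS = {".exe", ".scr", ".js"}

# Whitelist kept per protocol: only these destination ports are expected.
WHITELIST_PORTS = {"tcp": {22, 25, 80, 443}, "udp": {53, 123}}


def verdict(protocol: str, src_ip: str, dst_port: int, filename: str = "") -> str:
    """Apply the blacklists first, then the per-protocol port whitelist."""
    addr = ipaddress.ip_address(src_ip)
    if any(addr in net for net in BLACKLIST_NETWORKS):
        return "deny: blacklisted address"
    if filename and any(filename.lower().endswith(ext) for ext in BLACKLIST_EXTENSIONS):
        return "deny: blacklisted file type"
    if dst_port not in WHITELIST_PORTS.get(protocol, set()):
        return "deny: port not whitelisted for " + protocol
    return "allow"
```

Keeping the whitelist per protocol means that, for instance, UDP 443 is not accepted just because TCP 443 is.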

-

Issues

The Problem of Cryptography

A particular problem for IDPS is the increasing use of cryptography, which can conceal useful information. The IDPS should be positioned where VPN traffic has already been decrypted (i.e. behind the VPN endpoint on the inbound path), so that it has a full chance to inspect data flowing into the network.

Resources

The memory requirements of a device holding a signature database need to be considered. For the IPS the effect on traffic flow needs to be considered as well.

Maintenance

To protect the network effectively, the signature file must be updated regularly. IDPSs must be checked to ensure that they are working correctly and that their security is maintained (IDPSs, like firewalls, are targets for hackers, since corrupting them makes the attackers' illicit activities harder to spot).

IDPSs are also potential targets for denial of service attacks. Faced with large volumes of traffic, these systems may be forced to reduce the depth of their inspection, leaving the network exposed.

Honeypots

Honeypot is the term given to a device or an area of a network that is designed to attract attackers in order to study their behaviour, tools and techniques. Honeypots can also be designed as decoys, to ensure that attention is drawn away from more important assets. This allows the defence team to react to an attack before damage is done.

The main issue with honeypots is that they have to look attractive, but not so obviously that attackers realise they are being observed or diverted away from more valuable assets.

-

Summary

In this lecture we introduced several techniques and technologies that can be used to protect a network against cyber-attacks. We have discussed several defence techniques such as defence in depth, access controls and patch management.

Technologies such as firewalls have also been introduced. Firewalls are a manifestation of the ‘security policy’, can be implemented on any device that connects two networks, and are viewed as network perimeter security.

Firewalls have limitations. Particular care needs to be taken to ensure that they cannot be bypassed. They offer little protection from an insider attack; and a simple firewall solution might represent a single point of failure. Their success is highly dependent on correct implementation of security: an improperly configured firewall is a hack waiting to happen.

We have also introduced IDPS, describing their components and methods of operation and contrasting them with firewalls. We focused on network based IDPS, and also mentioned host based IDPS, which can be used in conjunction with network based systems to extend the depth of security on the network.

We have also briefly discussed honeypots. These are areas of a network designed to lure attackers so that they can be monitored to allow understanding of their behaviour.
