
Overview

- Virtualization techniques & Hypervisors:

- binary translation, paravirtualization, hardware-assisted

- Type 2

- Type 1

- Containers

- Linux and Windows Containers

- Cluster Management (Orchestration)

Azure Web Apps

Resource Management Policies

admission control

capacity allocation

load balancing

energy optimisation

QoS guarantees


Virtualization

Virtual machines.

Cloud uses same basic concepts as virtualization on the desktop.

- on desktop provides convenient user experience e.g. other OS, drag & drop, firewalls, DHCP, NAT, sandboxed, elevated privileges, legacy software, user can configure and manage VMs...

- Presents logical view of physical resources.

- pool physical resources and manage them as a whole

- dynamically created at time of need

- not just OS instances but also storage, networking...

Hypervisors

What is a hypervisor?

A hypervisor, also called a virtual machine monitor (VMM), provides a virtualization layer which allows multiple operating systems to share a single hardware host.

Each operating system appears to have the host's processor, memory, and other resources all to itself.

- However, the hypervisor is actually:-

- controlling the host processor and resources,

- allocating what is needed to each operating system in turn and

- making sure that the guest operating systems (called virtual machines) cannot disrupt each other.

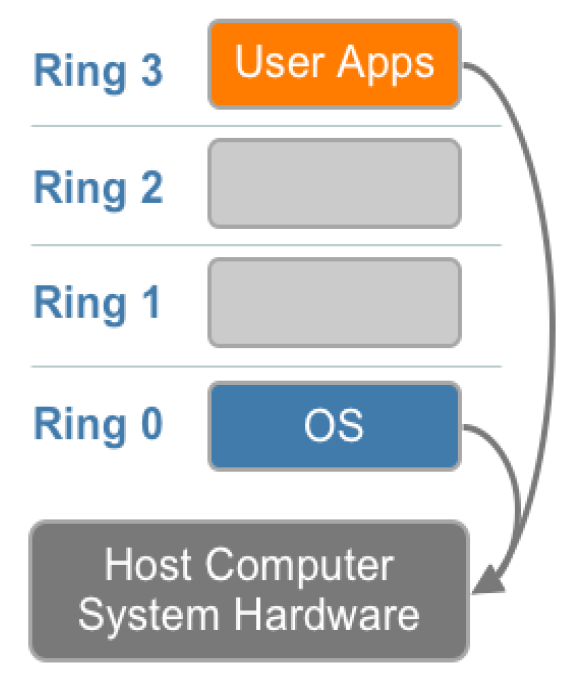

OS Protection Rings - Review

- The architecture of OSs & CPUs predates the cloud. To recap about x86 and x64 processors:-

- CPU modes – Ring 3 and Ring 0

- Ring 3 is for user mode processes – no direct access to I/O instructions etc.

- Ring 0 is for the kernel mode

- Rings 1 and 2 not usually used in mainstream OSs

- mode switch on system call execution and interrupts to kernel mode

OS Protection Rings - Virtualization

- This is problematic for virtualization:-

- where does the hypervisor go?

- how do we protect guests from one another and the host from the guests?

- the hypervisor should not run in the same privilege ring as guest kernels as there will be no protection between them

We could put the guest OS in Ring 1 or 2 (and thus the hypervisor goes in Ring 0) but this creates more issues: some privileged instructions e.g. I/O instructions, are not executable here and some behave differently.

- Various approaches:-

- binary translation

- paravirtualization

- hardware assisted virtualization

Binary Translation or “Full Virtualization”

User level instructions run unmodified at native speed.

Guest OS kernel does not need to be altered but runs in Ring 1.

- Guest kernel completely decoupled from the underlying hardware by the virtualization layer.

- hypervisor in Ring 0

- hypervisor will emulate a set of virtualised devices

- The hypervisor translates all guest privileged instructions on the fly to new sequences of instructions that have the intended effect on the virtualised devices.

- these new sequences of instructions run in Ring 1 and call the hypervisor

- performance penalty due to translation and longer code

- sped up by caching translation results for reuse
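The translate-and-cache idea above can be sketched as a toy model. This is only an illustration under loud assumptions: the instruction names (`OUT`, `CLI`, `STI`), the `CALL_HV` marker and the `TranslatingHypervisor` class are all hypothetical, and a real translator works on machine code and typically emits longer sequences than the one-for-one rewrite shown here.

```python
from typing import Dict, List, Tuple

# Hypothetical toy instruction set; these are not real x86 encodings.
PRIVILEGED = {"OUT", "CLI", "STI"}


class TranslatingHypervisor:
    """Toy model: rewrite privileged guest instructions into hypervisor calls."""

    def __init__(self) -> None:
        self.device_log: List[str] = []   # effects applied to virtualised devices
        self.cache: Dict[Tuple[str, ...], Tuple[str, ...]] = {}
        self.translations = 0             # how many blocks were actually translated

    def translate(self, block: List[str]) -> Tuple[str, ...]:
        """Translate a block once, then reuse the cached result."""
        key = tuple(block)
        if key not in self.cache:
            self.translations += 1
            self.cache[key] = tuple(
                f"CALL_HV {instr}" if instr.split()[0] in PRIVILEGED else instr
                for instr in block
            )
        return self.cache[key]

    def emulate(self, instr: str) -> None:
        """Apply the intended effect to a virtualised device (here: just log it)."""
        self.device_log.append(instr)

    def run(self, block: List[str]) -> None:
        """Run the translated block; only rewritten instructions reach the hypervisor."""
        for instr in self.translate(block):
            if instr.startswith("CALL_HV "):
                self.emulate(instr[len("CALL_HV "):])
            # Unprivileged instructions would run natively; nothing to model here.


hv = TranslatingHypervisor()
guest_block = ["MOV a, 1", "OUT 0x3f8, a", "ADD a, 2"]
hv.run(guest_block)
hv.run(guest_block)  # second run hits the translation cache
```

Running the same block twice translates it only once, which is the saving that caching is meant to provide.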

Paravirtualization

Lower runtime virtualization overhead than binary translation.

Again user level instructions run unmodified at native speed.

- Guest kernel in Ring 1; hypervisor in Ring 0

However guest OS kernel modified to replace privileged instructions with hypercalls that communicate with the hypervisor.

- having to modify the kernel for specific hypervisors is not a good business option...

The hypervisor also provides hypercall interfaces for other critical kernel operations such as memory management, interrupt handling and time keeping.
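A hypercall interface can be pictured as a table of named entry points that a modified guest kernel calls instead of executing privileged instructions. The sketch below is a toy model only: the hypercall names (`update_page_table`, `get_time`) and both classes are hypothetical and do not correspond to any real hypervisor's interface.

```python
from typing import Callable, Dict


class HypercallHypervisor:
    """Toy hypervisor exposing a small, hypothetical hypercall table."""

    def __init__(self) -> None:
        self.page_tables: Dict[str, Dict[int, int]] = {}
        self.clock_ticks = 0
        self._table: Dict[str, Callable] = {
            "update_page_table": self._update_page_table,
            "get_time": self._get_time,
        }

    def hypercall(self, guest_id: str, name: str, *args):
        """Single entry point used by guest kernels."""
        handler = self._table.get(name)
        if handler is None:
            raise ValueError(f"unknown hypercall: {name}")
        return handler(guest_id, *args)

    def _update_page_table(self, guest_id: str, virtual_page: int, frame: int) -> int:
        # Each guest gets its own mapping, so guests cannot disturb each other.
        self.page_tables.setdefault(guest_id, {})[virtual_page] = frame
        return 0

    def _get_time(self, guest_id: str) -> int:
        self.clock_ticks += 1
        return self.clock_ticks


class ParavirtualGuestKernel:
    """Guest kernel modified to call the hypervisor for privileged work."""

    def __init__(self, guest_id: str, hv: HypercallHypervisor) -> None:
        self.guest_id = guest_id
        self.hv = hv

    def map_page(self, virtual_page: int, frame: int) -> int:
        # An unmodified kernel would execute a privileged instruction here.
        return self.hv.hypercall(self.guest_id, "update_page_table", virtual_page, frame)


pv_hv = HypercallHypervisor()
vm1 = ParavirtualGuestKernel("vm1", pv_hv)
vm2 = ParavirtualGuestKernel("vm2", pv_hv)
vm1.map_page(1, 100)
vm2.map_page(1, 200)
```

The point of the design is that the guest kernel source has to know about `hypercall`, which is why the kernel must be modified for a specific hypervisor.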

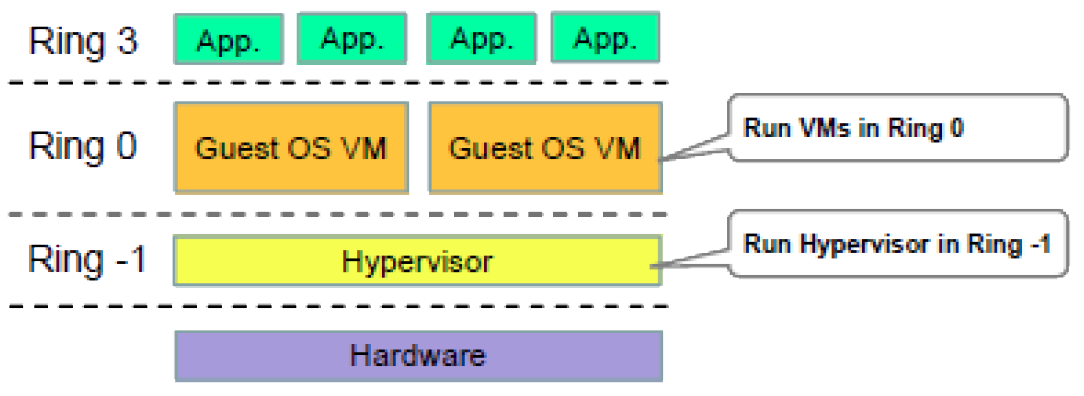

Hardware Assisted Virtualization

- Current generation CPUs now have hardware support :-

- hardware-assisted virtualization

- new privilege mode Ring -1 for hypervisor “above” Ring 0

- second-level address translation (SLAT): the CPU translates guest physical addresses to host physical addresses in hardware, so the hypervisor no longer has to maintain shadow page tables in software

- e.g. Intel VT and AMD-V extensions

- Guest kernels will have been written to run in Ring 0 and here can be deployed unaltered.

- no need for binary translation

- hypercalls used only to execute admin/management functions of hypervisor

📷 Hardware Assisted Virtualization

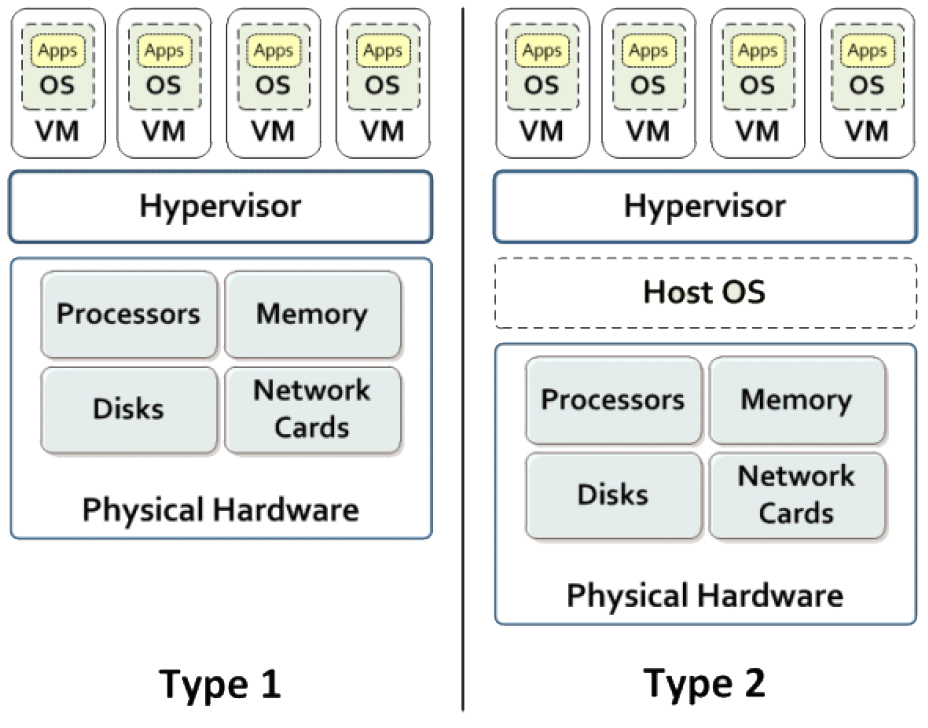

Hypervisors – Type 2

Sometimes called hosted or embedded hypervisors.

- Type 2 hypervisors are now confined to desktop virtualization software.

- e.g. VMWare Workstation, VMWare Fusion, VirtualBox

- full host OS installed and used

- attractive as a simple and clean user experience with simple VM installation and creation utilities

- really for desktops/laptops

- libraries of pre-built VM images available

- originally just used binary translation but some features of hardware assisted virtualization (memory management) now incorporated

VMWare Workstation components include a Ring 0 hypervisor and Ring 3 user application on the host.

- all installed on top of existing host OS as a normal install

Some performance penalty due to having both host and guest kernels.

- this is a key issue for cloud deployment as they run with too much overhead

Almost universal hardware compatibility.

- runs on anything that can run Windows or Linux as a host OS

Hypervisors – Type 1

- Used in the cloud – also called native or bare-metal hypervisors.

- no host OS kernel

- just hypervisor + guest kernels

- hardware assisted virtualization

- i.e. hypervisor in Ring -1

- Hyper-V, VMWare ESXi, KVM, XenServer...

Much faster than Type 2 as fewer software layers i.e. one kernel.

Not usually a full desktop experience – client version of Microsoft Hyper-V a key exception.

- Type 1 hypervisors really for servers

Live VM migration possible.

Needs separate management interface to manage VM lifecycle perhaps with privileged access.

No host OS to exploit so more secure.

Management more likely possible through scripts.

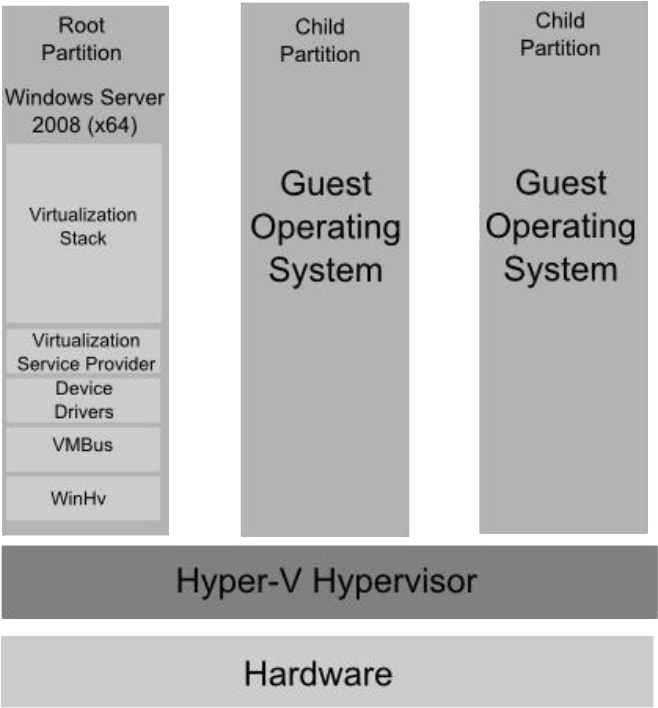

MS Hyper-V underpins Azure in data-centres.

Supported hardware more limited than Type 2.

- hypervisor inseparable from computer set up and will need full software rebuild to remove

- As is usual in this type of architecture Hyper-V introduces a management VM instance (“root partition”). This provides amongst other things:-

- Virtualization Stack to manage lifecycles of guest VMs etc.

- VMBus providing optimised communication between guest VMs (“partitions”)

Integrates with Windows Azure Services for Windows Server VM Cloud facilitating data-centre VM provisioning and management.

Microsoft Hyper-V however is also incorporated into current Windows desktop OSs as an optional feature for Type 1 desktop virtualization.

- smaller feature set than the server version

- its main use is for software testing and demonstration especially on different MS OS versions

Optimisations

In the cloud, VM instances may take 10 minutes to create and use lots of resources...

- Other options:-

- containers

- "super isolated processes"

- Linux and Windows Containers

- Azure Web Apps

- were Azure Web Sites until rebranding in Spring 2015

- an older and less generalised approach for IIS-based web applications

- Neither is an alternative to VMs, but rather an enhancement.

- each could be used with or without virtualization on a desktop/server

- used inside VMs in the cloud

- if used on VMs, VMs can now easily become multi-tenanted


Containers

Significantly smaller and more efficient than creating dedicated VMs.

- anecdotally, a server host utilising containers can effectively host 4-6 times more server instances than a dedicated VM approach

- Characterised by:-

- strong isolation at an OS process level

- fast start and lower overall resource requirements

- standard application packaging (if used in conjunction with a technology like Docker)

- one or more add-on management systems offering more features including packaging and deployment, clustering, orchestration, load balancing...

Containers - “The New Stack”

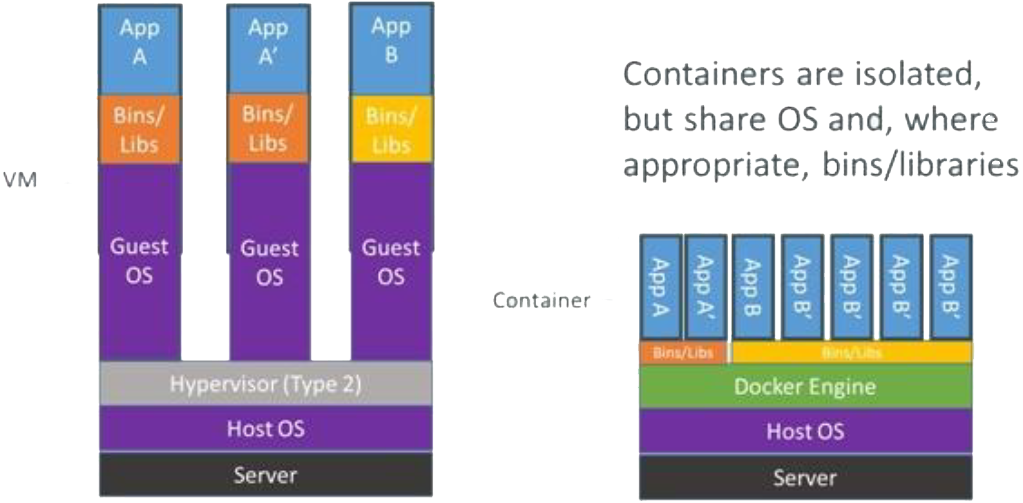

Containers vs. VMs

Containers

Containers run as sandboxed user processes sharing a common kernel instance and common libraries with other containers. A container sees itself as its own independent system.

- Two current types running different OS's native applications:-

- Linux Containers (LXC)

- Windows Containers

- Windows 10 & Windows Server 2016

- 🔗 Linux vs. Windows Containers: What’s the Difference?

Containers are the enabling technology for microservices discussed in a later lecture.

- Two OS enhancements required to underpin containers:-

- to manage and monitor the allocation of resources to containers e.g. CPU, memory, bandwidth etc.

- Linux Containers: Control Groups (Cgroups)

- Windows Containers: Windows Job Objects

- to isolate containers’ execution environments from each other. This allows containers to have their own process (and process id space), root file system, network devices (IP address etc.), user id space etc.

- Linux Containers: Kernel Namespaces

- Windows Containers: Object Namespaces
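The two enhancements can be modelled in a few lines: one object that accounts for and caps resource use (the role played by control groups or job objects) and one that gives each container its own process id space (the role played by namespaces). This is a pure-Python toy model under stated assumptions; `ResourceGroup`, `PidNamespace` and `ToyHost` are hypothetical names and nothing here calls a real kernel interface.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class ResourceGroup:
    """Cgroup-like accounting: caps the memory one container may use."""
    memory_limit_mb: int
    memory_used_mb: int = 0

    def charge(self, mb: int) -> bool:
        """Record the allocation if it fits under the limit."""
        if self.memory_used_mb + mb > self.memory_limit_mb:
            return False
        self.memory_used_mb += mb
        return True


@dataclass
class PidNamespace:
    """Namespace-like isolation: each container numbers its processes from 1."""
    local_to_host: Dict[int, int] = field(default_factory=dict)

    def add(self, host_pid: int) -> int:
        local_pid = len(self.local_to_host) + 1
        self.local_to_host[local_pid] = host_pid
        return local_pid


class ToyHost:
    """One shared 'kernel' hosting several isolated containers."""

    def __init__(self) -> None:
        self.next_host_pid = 1000
        self.containers: Dict[str, Tuple[ResourceGroup, PidNamespace]] = {}

    def create_container(self, name: str, memory_limit_mb: int) -> None:
        self.containers[name] = (ResourceGroup(memory_limit_mb), PidNamespace())

    def spawn(self, name: str, memory_mb: int) -> int:
        """Start a process in a container and return the pid the container sees."""
        group, namespace = self.containers[name]
        if not group.charge(memory_mb):
            raise MemoryError(f"container {name!r} would exceed its memory limit")
        host_pid = self.next_host_pid
        self.next_host_pid += 1
        return namespace.add(host_pid)


host = ToyHost()
host.create_container("web", memory_limit_mb=100)
host.create_container("db", memory_limit_mb=100)
web_pid = host.spawn("web", memory_mb=60)
db_pid = host.spawn("db", memory_mb=60)
```

Both containers see their first process as pid 1 even though the host assigns them different pids, and a further 60 MB request in either container would be refused by its resource group.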

Docker

- Built on top of containers.

- a container management tool

- for managing both Linux and Windows Containers

- Ready to run "packaged-up" applications.

- portable deployment across machines by offering an abstraction of machine-specific settings

- bundle all dependencies together

- can be run on a Linux distribution or a Windows Server instance as appropriate in a virtualized sandbox without the need to make custom builds for different environments

Provides development tools to assemble container from source code with full control over application dependencies.

Also provides reuse, versioning (similar to git), sharing, API...

Cluster Management (Orchestration)

VM resource allocation is under the control of the cloud vendor's infrastructure and is largely opaque to the developer.

see Resource Management slides later

Container resource allocation in contrast has evolved with container technology and there are lots of competing add-on options.

different sets available as services by different cloud vendors

see summary table below

- Features include (and vary):-

- describe and deploy/delete containers given a description (YAML) of application

- manage deployments (run rolling updates, replace a current application Docker image individually or in batches)

- create services

- scale deployments up and down (load balancers)

- health monitoring and recovery

- live (hot) migration

- metrics, persistence, resource sharing and security

- service discovery

- monitor application
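As an illustration of the "rolling update in batches" feature, the sketch below replaces the image of each replica a batch at a time and records the deployment state after every batch. It is a simplified model, not any orchestrator's actual algorithm; the function name `rolling_update` and the image tags `app:v1` and `app:v2` are hypothetical.

```python
from typing import List


def rolling_update(replicas: List[str], new_image: str, batch_size: int) -> List[List[str]]:
    """Replace replica images batch by batch; return the state after each batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    state = list(replicas)  # work on a copy so the caller's list is untouched
    history: List[List[str]] = []
    for start in range(0, len(state), batch_size):
        end = min(start + batch_size, len(state))
        for i in range(start, end):
            state[i] = new_image  # this replica is stopped and restarted on the new image
        history.append(list(state))
    return history


states = rolling_update(["app:v1"] * 5, "app:v2", batch_size=2)
for step, snapshot in enumerate(states, start=1):
    print(f"after batch {step}: {snapshot}")
```

Because only `batch_size` replicas change at a time, the remaining replicas keep serving requests during the update. A real orchestrator would also wait for health checks between batches.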

| Name | Backing Company | Type | Community version limitations | Recommended use case |
| --- | --- | --- | --- | --- |
| Swarm | Docker | Container orchestration and scheduling | None | Small cluster, simple architectures. No multi-user. For small teams. |
| Nomad | HashiCorp | Container orchestration and scheduling | No web UI | If already using HashiCorp Consul or other service discovery tools. No multi-user. For small teams. |
| Kubernetes | Google | Container orchestration and scheduling | None | Production-ready, recommended for any type of containerised environments, big or small, very feature rich. |
| Rancher | Rancher Labs | Infrastructure management and container orchestration | None | Production-ready, recommended for any type of containerised or mixed environments (standalone applications can be run with Mesos that plugs into Rancher). Recommended for multi-cloud and multi-region clusters. |
| DC/OS | Mesosphere | Infrastructure management and container orchestration | No multi-user authentication. No role-based access control. No secret management. No extra monitoring tools. | Enterprise version can handle any type of containerised or mixed environments. Recommended for multi-cloud and multi-region clusters. Community version is very limited and is not recommended for production, only for POC and evaluation purposes. |

🔗 Scaling Out StreamSets With Kubernetes

also MS Service Fabric & Azure Container Service

🔗 Overview of Azure Service Fabric

Resource Management

Generally means using cloud infrastructure as efficiently as possible to allow a cloud application deployed as VMs to complete whilst adhering to its SLA.

- Includes:-

- VM instance and data provisioning i.e. provider prepares appropriate resources at start of service

- VM migration mid-execution

- feedback monitoring to oversee performance

- graceful scaling (up and down) of number of VM instances i.e. dynamic provisioning

- fault detection and recovery

- SLA monitoring and logging of SLA violations

- violations may entail a refund or penalty payment to customer

Cloud resource management policies can be split into 5 classes:-

1. Admission Control

2. Capacity Allocation

3. Load Balancing

4. Energy Optimisation

5. QoS Guarantees

Some of this is adapted from operating systems theory.

🔗 Chapter 6 - Cloud Resource Management and Scheduling

Will concentrate on first 3.

Admission Control

Admission of new work may be refused if it would prevent work already in progress, or already contracted, from completing.

Difficult in large distributed systems, where the global state of the system at any instant is hard to know.

- Often the parameter used for admission control policy is the current system load

- e.g. once an 80% load threshold is reached the cloud platform stops accepting additional work

- however estimation of the current system load is likely to be inaccurate as system is large, dynamic and distributed
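A threshold policy of this kind can be sketched as follows. The 80% figure comes from the example above; everything else is an assumption made for illustration, including the function names and the choice to estimate system load as the mean of the most recent per-node reports.

```python
from typing import List


def estimate_load(node_reports: List[float]) -> float:
    """Estimate system load as the mean of per-node load reports (each 0.0 to 1.0).

    Assumption: reports may be stale or missing nodes, so this is only an estimate.
    """
    if not node_reports:
        raise ValueError("at least one load report is required")
    return sum(node_reports) / len(node_reports)


def admit(node_reports: List[float], threshold: float = 0.80) -> bool:
    """Accept new work only while the estimated load is below the threshold."""
    return estimate_load(node_reports) < threshold


# Fresh reports: the platform is busy and should refuse new work.
fresh = [0.85, 0.90, 0.88]
# Stale reports from earlier in the day for the same nodes.
stale = [0.50, 0.60, 0.55]

print("decision on fresh reports:", admit(fresh))
print("decision on stale reports:", admit(stale))
```

The two calls disagree even though they describe the same nodes, which is the practical consequence of an inaccurate load estimate: the policy is only as good as the measurements fed into it.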

Capacity Allocation

Allocates resources for individual instances.

- automated self-management approach

Several approaches including those based on control theory and machine learning techniques.

Control theory uses closed feedback measurement loops to monitor system performance and stability with respect to various set parameters including QoS objectives.
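A closed feedback loop can be illustrated with a simple proportional controller that adjusts the number of VM instances until measured utilisation approaches a set point. This is a minimal sketch under stated assumptions: the target utilisation of 0.6, the gain of 0.5, the demand figure and the function names are all hypothetical, and real controllers must also handle measurement delay and noise.

```python
from typing import List


def control_step(instances: int, utilisation: float,
                 target: float = 0.6, gain: float = 0.5,
                 min_instances: int = 1) -> int:
    """One feedback step: scale instances in proportion to the utilisation error."""
    error = utilisation - target          # positive means overloaded
    adjustment = gain * instances * error / target
    return max(min_instances, round(instances + adjustment))


def simulate(demand: float, instances: int, steps: int) -> List[int]:
    """Repeatedly measure utilisation and apply the controller.

    Assumption: demand is expressed in units of one instance's full capacity,
    so utilisation is simply demand / instances.
    """
    history: List[int] = []
    for _ in range(steps):
        utilisation = demand / instances  # the measurement fed back to the controller
        instances = control_step(instances, utilisation)
        history.append(instances)
    return history


trace = simulate(demand=12.0, instances=4, steps=10)
print("instance counts over time:", trace)
print("final utilisation:", 12.0 / trace[-1])
```

A gain below 1 makes the controller approach the set point over several steps rather than jumping straight to it, trading speed of response for stability.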

Load Balancing

- In this context the aim is to concentrate work on a minimum set of servers rather than to spread it equally.

- to limit cost

- switch rest to standby so related to energy optimisation
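Concentrating work on as few servers as possible is a bin-packing problem. The sketch below uses the first-fit decreasing heuristic, which is one common choice and not necessarily what any particular cloud platform does; the function name `consolidate` and the example loads are hypothetical.

```python
from typing import List


def consolidate(loads: List[float], capacity: float = 100.0) -> List[List[float]]:
    """Pack workloads onto as few servers as first-fit decreasing manages.

    Each load and the capacity are in the same unit (here: percent of one server).
    """
    servers: List[List[float]] = []
    for load in sorted(loads, reverse=True):   # place the largest workloads first
        if load > capacity:
            raise ValueError(f"load {load} exceeds server capacity {capacity}")
        for server in servers:
            if sum(server) + load <= capacity:
                server.append(load)            # fits on a server already in use
                break
        else:
            servers.append([load])             # otherwise bring one more server online
    return servers


pool_size = 5
placement = consolidate([50, 20, 30, 40, 10])
standby = pool_size - len(placement)
print("active servers:", placement)
print("servers that can go to standby:", standby)
```

With these loads the work fits on two servers, so the other three in a pool of five can be switched to standby, which is where load balancing meets energy optimisation.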