-

Dynamic Vs Static

Static and dynamic analyses are two of the most popular types of code security tests. Before implementing them, however, the security-conscious enterprise should examine precisely how each type of test can help to secure the software development life cycle (SDLC). Testing, after all, is an investment that should be carefully monitored.

Static analysis

Static analysis is performed in a non-runtime environment: the code is examined without being executed. Typically, a static analysis tool inspects program code to reason about its possible run-time behaviors and to seek out coding flaws, back doors, and potentially malicious code.
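To make this concrete, here is a minimal Python sketch of a static check, assuming the code under review is itself Python. It uses the standard `ast` module to parse the source without running it and flags direct calls to `eval`, `exec`, `compile`, and `__import__`. That list, the `find_dangerous_calls` name, and the `SAMPLE` snippet are illustrative assumptions; real static analysers apply far richer rule sets.

```python
import ast

# Calls that commonly indicate dynamic code execution (illustrative, not exhaustive).
DANGEROUS_CALLS = {"eval", "exec", "compile", "__import__"}


def find_dangerous_calls(source: str, filename: str = "<string>") -> list[tuple[int, str]]:
    """Parse source without executing it and report (line, name) for risky calls."""
    tree = ast.parse(source, filename=filename)
    findings = []
    for node in ast.walk(tree):
        # Only bare-name calls such as eval(x); attribute calls like obj.eval() are skipped.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in DANGEROUS_CALLS:
                findings.append((node.lineno, node.func.id))
    return sorted(findings)


SAMPLE = '''
def handle(request):
    if request.get("debug"):
        return eval(request["expr"])  # only reached when debug is set
    return "ok"
'''

if __name__ == "__main__":
    for line, name in find_dangerous_calls(SAMPLE):
        print(f"line {line}: call to {name}()")
```

The flagged `eval` sits in a branch that only runs when `debug` is set, so a dynamic test using ordinary requests would never reach it.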

Dynamic analysis

Dynamic analysis adopts the opposite approach and is executed while a program is running. A dynamic test monitors system memory, functional behavior, response time, and the overall performance of the system. This method is not unlike the way a malicious third party might interact with an application.
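A dynamic check, by contrast, has to run the code. The sketch below, a hypothetical `run_monitored` helper built on Python's standard `tracemalloc` module and `time.perf_counter`, executes a function and records its result or exception, the elapsed time, and the peak traced memory. It only illustrates the idea; production dynamic-analysis tools instrument far more, such as system calls, network traffic, and sanitizer reports.

```python
import time
import tracemalloc


def run_monitored(func, *args):
    """Run func(*args) and record run-time observations about the call."""
    tracemalloc.start()
    start = time.perf_counter()
    result, error = None, None
    try:
        result = func(*args)
    except Exception as exc:  # record the failure instead of crashing the harness
        error = repr(exc)
    finally:
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
        tracemalloc.stop()
    return {"result": result, "error": error, "seconds": elapsed, "peak_bytes": peak}


if __name__ == "__main__":
    ok = run_monitored(lambda n: len([0] * n), 1_000_000)
    bad = run_monitored(int, "not a number")
    print(ok["peak_bytes"], ok["seconds"])
    print(bad["error"])  # the ValueError raised by int() is captured, not propagated
```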

Having originated and evolved separately, static and dynamic analysis have, at times, been mistakenly viewed in opposition.

Strengths and Weaknesses of Static and Dynamic Analyses

Static analysis is a thorough approach and may also prove more cost-efficient, because it can detect bugs at an early phase of the software development life cycle. For example, if an error is spotted at a review meeting or during a desk-check, it can be relatively cheap to remedy. Had the error become lodged in the system, the costs would multiply.

Static analysis can also unearth latent errors in code paths that a dynamic test never exercises. Dynamic analysis, on the other hand, can expose subtle flaws or vulnerabilities too complicated for static analysis alone to reveal, and it can also be the more expedient method of testing. A dynamic test, however, will only find defects in the part of the code that is actually executed.

The enterprise must weigh these considerations against the complexities of its own situation. Application type, time, and company resources are some of the primary concerns.

-

When to Automate Application Security Testing

While static and dynamic analysis can be performed manually, they can also be automated. Used wisely, automated tools can dramatically improve the return on testing investment, and they are an ideal option in certain situations. For example, automation may be used to test a system’s reaction to a heavy volume of users or to confirm that a bug fix works as expected. It also pays to automate tests that are run on a regular basis during the SDLC.
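As an example of the bug-fix case, here is a minimal sketch of an automated regression test using Python's standard `unittest` module. The `safe_join` function and its path-traversal fix are hypothetical; the point is that once such a test exists, CI can rerun it on every build so a fixed vulnerability cannot quietly return.

```python
import os
import unittest


def safe_join(base_dir: str, user_path: str) -> str:
    """Join a user-supplied path onto base_dir, refusing to escape it.

    Hypothetical function whose path-traversal bug has been fixed.
    """
    base = os.path.realpath(base_dir)
    candidate = os.path.realpath(os.path.join(base, user_path))
    # commonpath equals base only if candidate is base itself or lies inside it.
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError(f"path escapes base directory: {user_path!r}")
    return candidate


class SafeJoinRegressionTest(unittest.TestCase):
    """Rerun on every CI build so the fixed bug cannot silently return."""

    def test_normal_path_is_allowed(self):
        self.assertTrue(safe_join("/srv/files", "report.txt").endswith("report.txt"))

    def test_parent_traversal_is_rejected(self):
        with self.assertRaises(ValueError):
            safe_join("/srv/files", "../../etc/passwd")

    def test_absolute_path_is_rejected(self):
        with self.assertRaises(ValueError):
            safe_join("/srv/files", "/etc/passwd")
```

Saved in a test module, it can be run with `python -m unittest` as part of each build.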

As the enterprise strives to secure the SDLC, it must be noted that there is no panacea. Neither static nor dynamic testing alone can offer blanket protection. Ideally, an enterprise will perform both static and dynamic analyses, so that each covers the other’s blind spots: static analysis reaches code that never runs during testing, while dynamic analysis observes behavior that only emerges at run time.

Automated Security Testing for Developers

Security tests are just like any other kinds of tests — except that most of them don’t verify predefined behaviour. Instead, they check for the absence of certain behaviours and weaknesses known to lead to security risks.
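Here is a small sketch of what checking for an absence looks like, assuming a hypothetical `login` function backed by an in-memory user store. Rather than checking what the function does, the test asserts that its responses do not reveal whether a username exists, which would otherwise help an attacker enumerate accounts. Password hashing uses the standard library's `hashlib.pbkdf2_hmac`; the iteration count is an arbitrary choice for the example.

```python
import hashlib
import hmac
import os


def _hash(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical in-memory user store: username -> (salt, password hash).
_SALT = os.urandom(16)
USERS = {"alice": (_SALT, _hash("correct horse", _SALT))}

GENERIC_ERROR = "Invalid username or password."


def login(username: str, password: str) -> str:
    record = USERS.get(username)
    if record is None:
        return GENERIC_ERROR
    salt, expected = record
    if hmac.compare_digest(_hash(password, salt), expected):  # constant-time compare
        return "Welcome!"
    return GENERIC_ERROR


def test_login_does_not_reveal_which_usernames_exist():
    unknown_user = login("mallory", "whatever")
    wrong_password = login("alice", "wrong")
    # The property under test is an absence: the two failures must not be
    # distinguishable by their response text.
    assert unknown_user == wrong_password
```

This only checks the response text. The unknown-user branch also returns faster because it skips hashing, so a timing side channel would need a separate test.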

As part of continuous integration, where tests are run repeatedly, security tests help keep code free from:

- memory bugs,

- input bugs,

- performance-hindering issues,

- insecure behaviour,

- undefined behaviour.

However, before the rise of continuous integration, writing and implementing such tests wasn’t everyone’s idea of a good use of resources, even within the security engineering community (although performing security checks manually is an even worse use of time). These days, when security-related scares and threats arise repeatedly, writing such tests should become everyone’s favourite hobby, because every piece of software contributes to overall security, or to the lack thereof.

Security testing 101

A good place to start with security testing is to find out which security controls are actually present in your product (application, website, etc.). The next step is to understand how these controls are affected by execution flow and input data: whether their behaviour can be altered, and whether it can fail unexpectedly.

The process of security testing can be divided into four categories:

1. Functional security tests that verify that the security controls of your software work as expected.

2. Non-functional tests against known weaknesses and faulty component configurations (e.g. use of cryptography that is known to be weak, source code analysis for memory leaks and undefined behaviour, etc.).

3. Holistic security scans, in which an application or infrastructure is tested as a whole.

4. Manual testing and code review: sophisticated work that still cannot be fully algorithmised and delegated to machines, so it requires human attention.

-

Testing security controls

Testing security controls and security components boils down to making sure that security controls behave as expected under chosen circumstances. Examples include active and passive attacks on API calls wrapped in HTTPS, passing SQL injection patterns into user input, and manipulating parameters to mount path traversal attacks.

Some helpful open-source tools for such testing are:

- BDD-Security, a suite used as a testing framework for functional security testing, infrastructure security testing, and application security testing.

- Gauntlt, a set of Ruby hooks into security tools for integration into your CI infrastructure.

- OWASP ZAP and OWASP Zapper (a Jenkins plugin), which automate an attack proxy to test for some of the possible attacks.

- Mittn, F-Secure’s security testing tooling for CI.
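To illustrate the SQL injection case mentioned at the start of this section, here is a minimal Python sketch using the standard `sqlite3` module and an in-memory database. It feeds a few classic injection payloads into a lookup function and reports whether any of them returns rows. The table, the payload list, and both lookup functions are hypothetical; dedicated tools automate this kind of probing at a much larger scale.

```python
import sqlite3

# A few classic payloads; real test suites use much larger lists.
SQLI_PAYLOADS = ["' OR '1'='1", "alice' --", "'; DROP TABLE users; --"]


def make_db() -> sqlite3.Connection:
    """Fresh in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "s1"), ("bob", "s2")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is pasted into the SQL text.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver passes name as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


def injection_succeeds(lookup) -> bool:
    """True if any payload makes the lookup return rows."""
    for payload in SQLI_PAYLOADS:
        conn = make_db()
        try:
            rows = lookup(conn, payload)
        except (sqlite3.Error, sqlite3.Warning):
            continue  # rejected SQL: not a data leak, though worth logging
        finally:
            conn.close()
        # No user is literally named after a payload, so any row means a leak.
        if rows:
            return True
    return False


if __name__ == "__main__":
    print("unsafe lookup injectable:", injection_succeeds(find_user_unsafe))  # True
    print("safe lookup injectable:", injection_succeeds(find_user_safe))      # False
```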

Testing memory behaviour

Buffer overflows and remote code execution are among the most dangerous and damaging security issues your code might create. Detecting memory problems, undefined behaviour, and other glitches that enable attacks on execution flow can be automated through source code analysis. The most important warnings to look at concern memory leaks, buffer sizes, and the like.
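As a rough illustration, here is a deliberately simple lexical check in Python that flags C library calls often implicated in buffer overflows. It is only a sketch: the function list and advice strings are assumptions, it ignores block comments and string literals, and real source code analysers track data flow and buffer sizes. Compiling tests with a run-time checker such as GCC's or Clang's `-fsanitize=address` complements this kind of static scan.

```python
import re

# C library calls frequently involved in buffer overflows (illustrative list).
UNSAFE_C_CALLS = {
    "gets": "no bounds check at all; use fgets",
    "strcpy": "unbounded copy; check the destination size first",
    "strcat": "unbounded append; check the remaining space first",
    "sprintf": "unbounded formatting; use snprintf",
}

# \b prevents matches inside longer identifiers such as fgets.
CALL_RE = re.compile(r"\b(" + "|".join(UNSAFE_C_CALLS) + r")\s*\(")


def scan_c_source(source: str) -> list[tuple[int, str, str]]:
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        code = line.split("//", 1)[0]  # naively drop single-line comments
        for match in CALL_RE.finditer(code):
            name = match.group(1)
            findings.append((lineno, name, UNSAFE_C_CALLS[name]))
    return findings


SAMPLE_C = """#include <string.h>
#include <stdio.h>
void greet(const char *name) {
    char buf[16];
    strcpy(buf, name);  // overflows when name has 16 or more characters
    snprintf(buf, sizeof buf, "%s", name);
}
"""

if __name__ == "__main__":
    for lineno, name, advice in scan_c_source(SAMPLE_C):
        print(f"line {lineno}: {name}() - {advice}")
```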

Fuzzing to find vulnerabilities

“Never trust your input” is one of the cardinal rules of computer programming. Fuzzing is an automated software testing technique that takes advantage of this rule: it searches for exploitable bugs by feeding random, invalid, and unexpected inputs to the tested software.

The data provided by the fuzzer is just “ok” enough for the parser not to reject it outright, but is rubbish otherwise, and feeding it into the tested software can lead to quite unexpected outcomes. This helps surface vulnerabilities that would otherwise go undetected. Another advantage of fuzzing is that it produces almost no false positives (which static analysers quite often report), because every reported failure was actually triggered by a concrete input.
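The loop below is a minimal sketch of a mutation-based fuzzer in Python. It starts from one valid input, randomly replaces, inserts, or deletes bytes, and treats any exception other than the parser's documented `ValueError` rejection as a finding. The record format and `parse_record` are hypothetical, with a missing bounds check planted on purpose; real fuzzers such as AFL or libFuzzer add coverage feedback to steer mutations toward new code paths.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical record format: [length byte][payload ...][checksum byte]."""
    if len(data) < 2:
        raise ValueError("record too short")    # documented rejection
    length = data[0]
    payload = data[1:1 + length]
    checksum = data[1 + length]                 # planted bug: length is never checked
    if sum(payload) % 256 != checksum:
        raise ValueError("bad checksum")        # documented rejection
    return payload


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Apply one to three random byte-level edits."""
    buf = bytearray(data)
    for _ in range(rng.randint(1, 3)):
        op = rng.choice(("replace", "insert", "delete"))
        if op == "replace" and buf:
            buf[rng.randrange(len(buf))] = rng.randrange(256)
        elif op == "insert":
            buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))
        elif op == "delete" and buf:
            del buf[rng.randrange(len(buf))]
    return bytes(buf)


def fuzz(target, seed_input: bytes, iterations: int = 10_000, seed: int = 0):
    """Return (input, error) pairs for every unexpected exception."""
    rng = random.Random(seed)
    findings = []
    for _ in range(iterations):
        candidate = mutate(seed_input, rng)
        try:
            target(candidate)
        except ValueError:
            pass                                # clean rejection, not a bug
        except Exception as exc:                # anything else is worth investigating
            findings.append((candidate, repr(exc)))
    return findings


SEED = bytes([3, 1, 2, 3, 6])  # valid record: payload 1, 2, 3 and checksum 6

if __name__ == "__main__":
    findings = fuzz(parse_record, SEED)
    print(len(findings), "crashing inputs; first:", findings[0] if findings else None)
```

Every finding comes with the exact input that triggered it, which is why fuzzing results are easy to reproduce and rarely false positives.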

Security-wise, fuzzing covers both testing security controls and testing memory behaviour: bugs like the famous Heartbleed (which combined poor security controls with unexpected memory behaviour) could easily have been found by fuzzing.
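To illustrate, here is a simplified Python model of the Heartbleed pattern. It is not OpenSSL code: a hypothetical heartbeat handler echoes back as many bytes as the request claims to contain, and adjacent “process memory” is modeled by appending a secret to the payload. The fuzzer sends well-formed but untrusted length fields and checks one security property: the response must never be longer than the payload actually sent.

```python
import random
import struct

SECRET = b"-----BEGIN PRIVATE KEY-----"  # stands in for whatever sits next in memory


def heartbeat_vulnerable(request: bytes) -> bytes:
    """Echo the payload, trusting the length field the client declared."""
    (declared_len,) = struct.unpack(">H", request[:2])
    memory = request[2:] + SECRET  # model: payload is followed by unrelated data
    return memory[:declared_len]   # over-reads past the payload when declared_len is too big


def heartbeat_fixed(request: bytes) -> bytes:
    (declared_len,) = struct.unpack(">H", request[:2])
    payload = request[2:]
    if declared_len > len(payload):
        return b""  # discard silently, as RFC 6520 requires for oversized lengths
    return payload[:declared_len]


def fuzz_heartbeat(handler, iterations: int = 1_000, seed: int = 0):
    """Return the first (request, response) that violates the property, else None."""
    rng = random.Random(seed)
    for _ in range(iterations):
        payload = bytes(rng.randrange(256) for _ in range(rng.randint(0, 16)))
        declared = rng.randint(0, 64)  # well-formed header, untrusted value
        request = struct.pack(">H", declared) + payload
        response = handler(request)
        # Property: never send back more bytes than the client actually sent.
        if len(response) > len(payload):
            return request, response
    return None


if __name__ == "__main__":
    print(fuzz_heartbeat(heartbeat_vulnerable))  # a leaking request/response pair
    print(fuzz_heartbeat(heartbeat_fixed))       # None
```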

For security testing, fuzzing is especially useful for feeding the app pseudo-valid inputs that cross a trust boundary. A trust boundary violation takes place when the tested app is made to trust unvalidated data fed into it. This approach mimics an adversary trying to feed malicious content into the app in the hope of achieving privilege escalation or simply causing a malfunction, a crash, etc.

Any fuzzing approach can find vulnerabilities, and the more fuzzing is done, the better. However, to get fuzzing right, you need at least a general idea of what you’re trying to accomplish. Fuzzing tests need to be well thought out, well planned, and well written. Fuzzing is also affected by the execution environment (the ripple effect), configuration, and the capabilities of the test suite. It will not be a magic bullet for all your automated security testing, but it will take you far, though only as far as you’re willing to invest time and effort into preparation.

Testing larger entities and high-level behaviour

Apart from testing the code you write, it’s useful to test larger entities: whole services and infrastructural components. This is especially important when the product you’re developing consists of many high-level entities and micro-services and has many autonomous dependencies.

Vulnerability scanners also help automate security testing by scanning your websites and/or network for a huge number of known risks. The result of such testing is usually a list of vulnerabilities detected in your infrastructure, with recommendations on how they can be patched or otherwise secured. Some automatic vulnerability scanners can also perform the patching themselves.

This is particularly relevant for software that is composed rather than written top-down, i.e. software that consists of many services, libraries, and chunks of code. Some of the popular free network vulnerability scanners include Open Vulnerability Assessment System (OpenVAS), Microsoft Baseline Security Analyzer, Nexpose Community Edition, Retina CS Community, and SecureCheq.
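For composed software, part of what such tools do is compare component versions against known advisories. The Python sketch below shows only that core comparison. The `ADVISORIES` entries, package names, and advisory IDs are made up for illustration, whereas real scanners pull this data from maintained vulnerability databases and handle far messier version strings.

```python
# Hypothetical advisory data: package -> [(first fixed version, note)].
# The packages and IDs are invented; real scanners query maintained databases.
ADVISORIES = {
    "examplelib": [((2, 4, 1), "EXAMPLE-0001: path traversal in archive extraction")],
    "fastparse": [((1, 0, 3), "EXAMPLE-0002: crash on malformed input")],
}


def parse_version(text: str) -> tuple[int, ...]:
    """Assumes plain dotted numeric versions such as '2.3.9'."""
    return tuple(int(part) for part in text.split("."))


def audit(installed: dict[str, str]) -> list[str]:
    """List advisories affecting the installed component versions."""
    findings = []
    for name, version in sorted(installed.items()):
        current = parse_version(version)
        for fixed_in, note in ADVISORIES.get(name, []):
            if current < fixed_in:  # tuples compare element by element
                fixed = ".".join(map(str, fixed_in))
                findings.append(f"{name} {version}: {note} (fixed in {fixed})")
    return findings


if __name__ == "__main__":
    installed = {"examplelib": "2.3.9", "fastparse": "1.0.3", "otherlib": "0.1"}
    for line in audit(installed):
        print(line)
```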

It is worth noting that infrastructures should be checked when they are complete and functional (live or near-live) for the maximum impact and usefulness of the check-up.

Performance testing for security purposes

Performance testing doesn’t usually spring to mind in the context of “security testing”, but performance reliability is really the first step towards ensuring the safe and secure functioning of a system. One of the risks to consider is denial of service caused by overload or by (D)DoS-type attacks. Whatever the cause, when the system is designed you need a precise estimate of the expected load level and of the point beyond which denial of service occurs.

Despite the lack of a single yardstick for different systems, it is possible to get useful testing results. If they are recorded and published along with the characteristics of the testing platform, they will help system architects. Running performance tests on the target equipment after the software has been installed and configured will also yield more precise threshold levels. This, in turn, will help to configure load-limiting and alert systems accordingly.
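One common load-limiting technique is a token bucket, sketched below in Python. The `rate` and `capacity` values would come from the thresholds measured on the target equipment. The class is a hypothetical minimal version, not any specific product's limiter, and it accepts an injectable clock so its behaviour can be tested deterministically.

```python
import time


class TokenBucket:
    """Admit on average `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: reject, queue, or raise an alert


if __name__ == "__main__":
    now = [0.0]
    bucket = TokenBucket(rate=2, capacity=2, clock=lambda: now[0])
    print([bucket.allow() for _ in range(3)])  # [True, True, False]
    now[0] = 0.5                               # half a second later: one token refilled
    print(bucket.allow())                      # True
```

Setting `rate` somewhat below the measured denial-of-service threshold leaves a safety margin, and a rising share of rejected requests is a natural trigger for the alert system.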

-

How Artificial Intelligence Can Improve Pentesting

In the first three months of 2018 alone, 686 cybersecurity breaches were reported, with unauthorized intrusion accounting for 38.9% of incidents. And with high-profile data breaches dominating headlines, it’s clear that while modern, complex software architecture might be more adaptable and data-intensive than ever, securing that software is proving a real challenge.

Penetration testing (or pentesting) is a vital component of the cybersecurity toolkit. In theory, it should be at the forefront of any robust security strategy. But it isn’t as simple as rolling something out with a few emails and new software. It demands highly skilled people, as well as a culture where stress testing and hacking your own system is viewed as a necessity, not an optional extra.

This is where artificial intelligence comes in – the automation that you can achieve through artificial intelligence could well help make pentesting much easier to do consistently and at scale. In turn, this would help organizations tackle both issues of skills and culture, and get serious about their cybersecurity strategies.

The shortcomings of established methods of pentesting

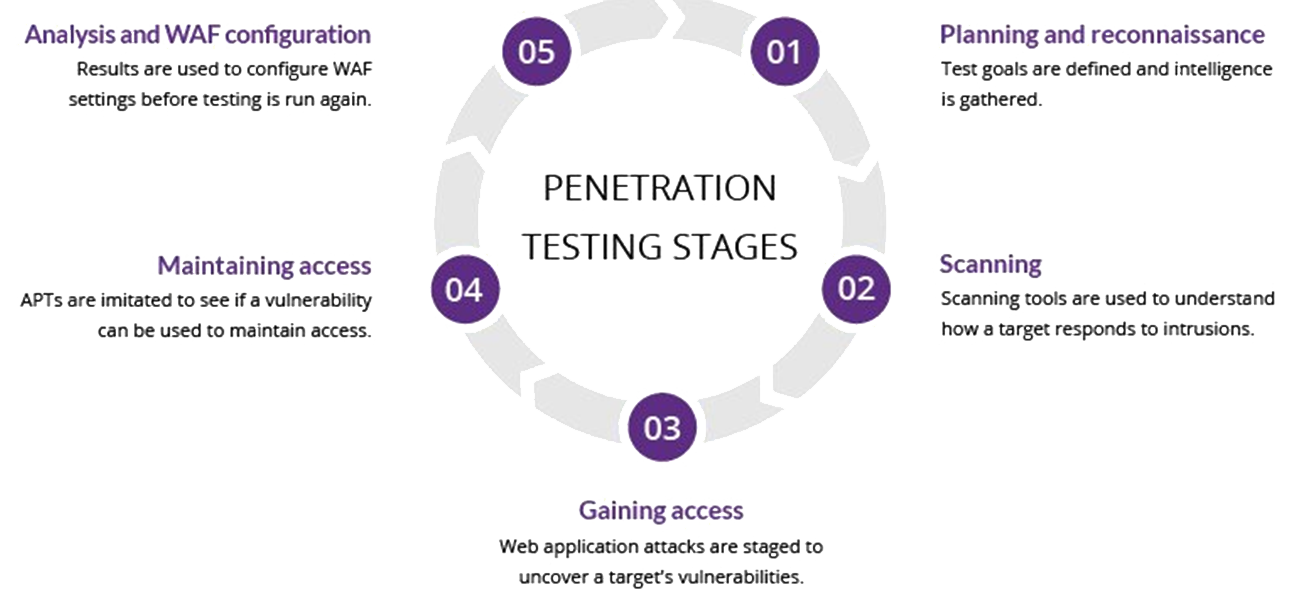

Typically, pentesting is carried out in 5 stages:

Source: Incapsula Every one of these stages, when carried out by humans, opens up the chance of error. Yes, software is important, but contextual awareness and decisions are required.

This process, then, provides plenty of opportunities for error, from misinterpreting data (like concluding that a system is secure when it actually isn’t) to handling evidence and recording the results of pentests thoroughly and clearly. Even the most experienced pentester will sometimes get things wrong.

But even if you don’t make any mistakes, this whole process is hard to do well at scale. Testing a piece of software takes a significant amount of time and energy, and given the pace of change created by modern development processes, that makes it much harder to maintain the level of rigor you ultimately want from pentesting.

This is where artificial intelligence comes in.

The pentesting areas that artificial intelligence can impact

Let’s dive into the different stages of pentesting that AI can impact.

#1 Reconnaissance Stage

The most important stage in pentesting is the reconnaissance, or information gathering, stage. As many in cybersecurity rightly say, “The more information gathered, the higher the likelihood of success.” Therefore, a significant amount of time should be spent obtaining as much information as possible about the target.

Using AI to automate this stage could provide accurate results while saving much of the time it usually takes. Using a combination of natural language processing, computer vision, and other AI techniques, experts can identify a wide variety of details that can be used to build a profile of the company, its employees, its security posture, and even the software/hardware components of its network and computers.
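As a hedged illustration of the profile-building step, the Python sketch below extracts email addresses and advertised software versions from pages that have already been collected. It is a rule-based stand-in: simple regular expressions replace the NLP models described above, and the page contents and `example.com` addresses are hypothetical.

```python
import re
from collections import Counter

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
GENERATOR_RE = re.compile(r'<meta\s+name="generator"\s+content="([^"]+)"', re.IGNORECASE)


def profile_pages(pages: dict[str, str]) -> dict:
    """Build a crude target profile from already-collected page contents."""
    emails: set[str] = set()
    software: Counter = Counter()
    for html in pages.values():
        emails.update(EMAIL_RE.findall(html))
        software.update(GENERATOR_RE.findall(html))  # e.g. CMS name and version
    domains = Counter(email.split("@", 1)[1].lower() for email in emails)
    return {"emails": sorted(emails), "email_domains": dict(domains), "software": dict(software)}


if __name__ == "__main__":
    pages = {
        "https://www.example.com/about": "Contact jane.doe@example.com or press@example.com",
        "https://www.example.com/blog": '<meta name="generator" content="WordPress 4.9.8"> by jane.doe@example.com',
    }
    print(profile_pages(pages))
```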

#2 Scanning Stage

Comprehensive coverage is needed in the scanning phase. Manually scanning thousands of systems in an organization is not ideal, and neither is manually interpreting the results returned by scanning tools. AI can be used to tune scanning tools as they scan systems, as well as to interpret the results of the scan. This can save pentesters time and improve the overall efficiency of the pentesting process.

AI can also take on test management and automatically create test cases that check whether a particular program should be flagged as having a security flaw. It can also be used to check how a target system responds to an intrusion.
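Interpreting scan output largely means deduplicating and prioritizing findings. The Python sketch below does this with a fixed rule (confirmed findings first, then by CVSS score). The `Finding` fields and sample data are assumptions, and the sort key marks the spot where a trained model's score could replace the hand-written rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    host: str
    check: str
    cvss: float        # base score reported by the scanner, 0.0 to 10.0
    confirmed: bool    # True if the scanner verified the issue, not just a version match


def triage(findings: list[Finding], min_cvss: float = 4.0) -> list[Finding]:
    """Deduplicate, drop low-severity noise, and order the rest for review."""
    unique = set(findings)  # frozen dataclasses are hashable, so duplicates collapse
    kept = [f for f in unique if f.cvss >= min_cvss]

    def priority(f: Finding):
        # Hand-written rule; a trained model's score could be substituted here.
        return (not f.confirmed, -f.cvss, f.host, f.check)

    return sorted(kept, key=priority)


if __name__ == "__main__":
    raw = [
        Finding("10.0.0.5", "outdated SSH server", 7.5, False),
        Finding("10.0.0.7", "SQL injection in /search", 9.8, True),
        Finding("10.0.0.5", "outdated SSH server", 7.5, False),  # duplicate from a rescan
        Finding("10.0.0.9", "TLS 1.0 enabled", 3.1, True),
    ]
    for f in triage(raw):
        print(f.host, f.check, f.cvss)
```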

#3 Gaining and Maintaining Access Stage

The gaining access phase involves taking control of one or more network devices in order either to extract data from the target or to use that device to launch attacks on other targets. Once a system has been scanned for vulnerabilities, pentesters need to ensure that it has no loopholes that attackers can exploit to get into the network devices. They need to check that the network devices are protected with strong passwords and other necessary credentials.

AI-based algorithms can try out different combinations of passwords to check whether the system is susceptible to a break-in. The algorithms can be trained to observe user data and look for trends or patterns from which to infer the passwords likely to be in use.
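The pattern-based guessing described above can be sketched without any machine learning. The hypothetical Python functions below build candidates from personal details using common human habits (capitalizing a word, appending a birth year or `!`) and test them against a salted PBKDF2 hash with `hashlib.pbkdf2_hmac`. A trained model would learn such patterns from data instead of hard-coding them; the profile fields, salt, and low iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import itertools


def candidate_passwords(profile: dict) -> list[str]:
    """Guesses built from personal details using common human habits."""
    words = [w for w in (profile.get("first_name"), profile.get("pet")) if w]
    years = [profile["birth_year"]] if "birth_year" in profile else []
    suffixes = ["", "!", "123"] + years + [year + "!" for year in years]
    guesses = []
    for word, suffix in itertools.product(words, suffixes):
        for variant in (word.lower(), word.capitalize()):
            guesses.append(variant + suffix)
    return list(dict.fromkeys(guesses))  # drop duplicates, keep order


def crack(stored_hash: bytes, salt: bytes, profile: dict, iterations: int = 1_000):
    """Return the first candidate whose PBKDF2 hash matches, or None."""
    for guess in candidate_passwords(profile):
        digest = hashlib.pbkdf2_hmac("sha256", guess.encode(), salt, iterations)
        if hmac.compare_digest(digest, stored_hash):
            return guess
    return None


if __name__ == "__main__":
    profile = {"first_name": "Alice", "pet": "rex", "birth_year": "1990"}
    salt = b"demo-salt"
    stored = hashlib.pbkdf2_hmac("sha256", b"Rex1990!", salt, 1_000)
    print(crack(stored, salt, profile))  # Rex1990!
```

If a password falls to a list this short, it would fall to a real attacker too, which is exactly what this stage is meant to reveal.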

Maintaining access focuses on establishing additional entry points to the target. This phase is expected to trigger mechanisms that secure the penetration tester’s continued access to the network. AI-based algorithms should be run at regular intervals to verify that the primary path to the device has been closed. The algorithms should be able to discover backdoors, new administrator accounts, encrypted channels, new network access channels, and so on.
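The periodic check for new administrator accounts and access channels can be approximated by diffing snapshots against a known-good baseline. In the Python sketch below, how snapshots are collected is left out, and the snapshot format and `take_snapshot` collector are hypothetical. This is a simple rule-based check, whereas an AI-based tool would aim to flag subtler anomalies as well.

```python
import time


def diff_snapshots(baseline: dict, current: dict) -> list[str]:
    """Report accounts and listening ports that appeared since the baseline."""
    alerts = []
    for user in sorted(set(current["admins"]) - set(baseline["admins"])):
        alerts.append(f"new administrator account: {user}")
    for port in sorted(set(current["listening_ports"]) - set(baseline["listening_ports"])):
        alerts.append(f"new listening port: {port}")
    return alerts


def monitor(take_snapshot, baseline: dict, interval_seconds: float, rounds: int, sleep=time.sleep):
    """Re-check at a fixed interval; take_snapshot is a hypothetical collector."""
    alerts = []
    for _ in range(rounds):
        alerts.extend(diff_snapshots(baseline, take_snapshot()))
        sleep(interval_seconds)
    return alerts


if __name__ == "__main__":
    baseline = {"admins": ["root", "ops"], "listening_ports": [22, 443]}
    current = {"admins": ["root", "ops", "svc_backup"], "listening_ports": [22, 443, 4444]}
    for alert in diff_snapshots(baseline, current):
        print(alert)
```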

#4 Covering Tracks And Reporting

The last stage tests whether an attacker can actually remove all traces of their attack on the system. Evidence is most often stored in user logs, existing access channels, and error messages caused by the infiltration process. AI-powered tools can assist in discovering hidden backdoors and multiple access points that may have been left open on the target network; all of these findings should be automatically stored in a report, with a proper timeline associated with every attack performed.
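Assembling the findings into a timeline-ordered report can be as simple as the Python sketch below. The event fields (`time`, `step`, `detail`) are hypothetical; times are ISO 8601 strings parsed with `datetime.fromisoformat`, and the report is emitted as JSON so that other tools can consume it.

```python
import json
from datetime import datetime


def build_report(events: list[dict]) -> str:
    """Return a JSON report with the findings ordered by when they happened."""
    timeline = sorted(events, key=lambda event: datetime.fromisoformat(event["time"]))
    return json.dumps({"total_findings": len(timeline), "timeline": timeline}, indent=2)


if __name__ == "__main__":
    events = [
        {"time": "2018-06-01T10:40:00", "step": "cleanup", "detail": "entries left in auth log"},
        {"time": "2018-06-01T09:15:00", "step": "recon", "detail": "staff emails collected"},
        {"time": "2018-06-01T10:05:00", "step": "access", "detail": "backdoor account created"},
    ]
    print(build_report(events))
```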

One example of a tool that aims to perform all these stages of pentesting is CloudSEK’s X-Vigil. It leverages AI to extract data, derive analyses, and discover vulnerabilities in time to protect an organization from a data breach.

-

Manual vs automated vs AI-enabled pentesting

Now that you have gone through the shortcomings of manual pentesting and the advantages of AI-based pentesting, let’s do a quick side-by-side comparison of manual, automated, and AI-enabled pentesting to understand how they differ.

| Aspect | Manual testing | Automated testing | AI-enabled pentesting |
|---|---|---|---|
| Accuracy | Not accurate at all times, due to human error. | More likely to return false positives. | More accurate than automated testing. |
| Time | Time-consuming and takes up human resources. | Executed by software tools, so significantly faster than a manual approach. | Does not consume much time; the algorithms can be deployed to thousands of systems at once. |
| Investment | Investment is required for human resources. | Investment is required for testing tools. | Saves investment in human resources for pentesting; the same employees can instead perform less repetitive, more valuable tasks. |
| When practical | Only when test cases are run once or twice and frequent repetition is not required. | When tools find test vulnerabilities out of programmable bounds. | In organizations with thousands of systems that need to be tested at once to save time and resources. |

AI-based Pentesting Tools

Pentoma is an AI-powered penetration testing solution that allows software developers to conduct smart hacking attacks and efficiently pinpoint security vulnerabilities in web apps and servers. It aims to identify holes in web application security before hackers do, helping to prevent potential security damage.

Pentoma analyzes web-based applications and servers to find unknown security risks. In Pentoma, with each hacking attempt, machine learning algorithms incorporate new vulnerability discoveries, thus continuously improving and expanding threat detection capability.

Wallarm Security Testing is another AI-based testing tool that discovers network assets, scans for common vulnerabilities, and monitors application responses for abnormal patterns.

It discovers application-specific vulnerabilities via Automated Threat Verification. The content of a blocked malicious request is used to create a sanitized test with the same attack vector to see how the application or its copy in a sandbox would respond.

With such AI-based pentesting tools, developers can focus on the development process itself, more confident that applications are secured against the latest hacking and reverse-engineering attempts, which helps to streamline a product’s time to market. What is driving this shift? Perhaps it is the increasing number of costly data breaches, or the continually expanding attack surface and proliferation of sensitive data, protected by increasingly complex security technologies that businesses lack the in-house expertise to manage properly.

Whatever the reason, more organizations are waking up to the fact that vulnerabilities not caught in time can be catastrophic for the business. These weaknesses, which range from poorly coded web applications and unpatched databases to exploitable passwords and an uneducated user population, can enable sophisticated adversaries to run amok across your business. It will be interesting to see how AI grows in this field to overcome the shortcomings described above.